I was working on a new VMM 2012 R2 install for a Windows Azure Pack POC and spent the better part of a day dealing with a failing VMM service. SQL 2012 SP1 had been installed on the same server, and during installation VMM was configured to run under the local SYSTEM account and to use the local SQL instance. Installation completed successfully, but the VMM service would not start and logged the following errors in the Application log in Event Viewer:

Log Name: Application

Source: .NET Runtime

Date: 12/31/2013 12:43:27 PM

Event ID: 1026

Task Category: None

Level: Error

Keywords: Classic

User: N/A

Computer: AZPK01

Description:

Application: vmmservice.exe

Framework Version: v4.0.30319

Description: The process was terminated due to an unhandled exception.

Exception Info: System.AggregateException

Stack:

at Microsoft.VirtualManager.Engine.VirtualManagerService.WaitForStartupTasks()

at Microsoft.VirtualManager.Engine.VirtualManagerService.TimeStartupMethod(System.String, TimedStartupMethod)

at Microsoft.VirtualManager.Engine.VirtualManagerService.ExecuteRealEngineStartup()

at Microsoft.VirtualManager.Engine.VirtualManagerService.TryStart(System.Object)

at System.Threading.ExecutionContext.RunInternal(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object, Boolean)

at System.Threading.ExecutionContext.Run(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object, Boolean)

at System.Threading.TimerQueueTimer.CallCallback()

at System.Threading.TimerQueueTimer.Fire()

at System.Threading.TimerQueue.FireNextTimers()

Log Name: Application

Source: Application Error

Date: 12/31/2013 12:43:28 PM

Event ID: 1000

Task Category: (100)

Level: Error

Keywords: Classic

User: N/A

Computer: AZPK01

Description:

Faulting application name: vmmservice.exe, version: 3.2.7510.0, time stamp: 0x522d2a8a

Faulting module name: KERNELBASE.dll, version: 6.3.9600.16408, time stamp: 0x523d557d

Exception code: 0xe0434352

Fault offset: 0x000000000000ab78

Faulting process id: 0x10ac

Faulting application start time: 0x01cf064fc9e2947a

Faulting application path: C:\Program Files\Microsoft System Center 2012 R2\Virtual Machine Manager\Bin\vmmservice.exe

Faulting module path: C:\windows\system32\KERNELBASE.dll

Report Id: 0e0178f3-7243-11e3-80bb-001dd8b71c66

Faulting package full name:

Faulting package-relative application ID:

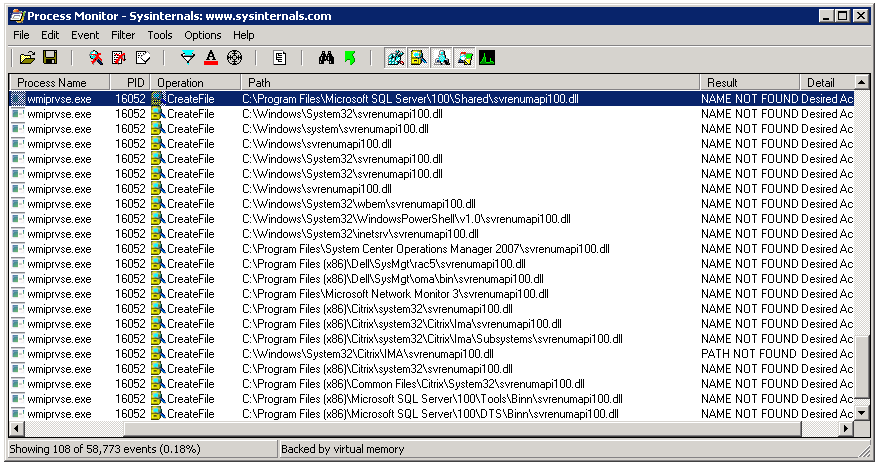
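Two fields in the second event are easier to read once decoded. The following is a minimal Python sketch, assuming the start time is a standard Windows FILETIME (100-nanosecond ticks since 1601-01-01 UTC) and that the low three bytes of the exception code hold an ASCII tag. The function names are my own, not part of any VMM or Windows tooling.

```python
from datetime import datetime, timedelta, timezone

# FILETIME counts 100-nanosecond ticks since 1601-01-01 00:00 UTC.
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_utc(hex_value: str) -> datetime:
    """Convert a hex FILETIME string from an event log entry to a UTC datetime."""
    ticks = int(hex_value, 16)
    # Ten ticks per microsecond; sub-microsecond precision is dropped.
    return FILETIME_EPOCH + timedelta(microseconds=ticks // 10)


def exception_code_tag(hex_value: str) -> str:
    """Return the ASCII tag stored in the low three bytes of an exception code."""
    code = int(hex_value, 16)
    return (code & 0xFFFFFF).to_bytes(3, "big").decode("ascii")


# Values copied from the Application Error event above.
start = filetime_to_utc("0x01cf064fc9e2947a")
tag = exception_code_tag("0xe0434352")
print(start.isoformat(), tag)
```

The start time decodes to 31 December 2013 at about 17:43 UTC, which would match the 12:43 PM event timestamps if the server was at UTC-5 (the log does not state the time zone). The tag decodes to `CCR`, the marker the .NET runtime uses for an unhandled managed exception, so the Application Error event only confirms that a .NET exception escaped and says nothing about its cause.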

I tried reinstalling VMM 2012 R2, this time selecting a domain account for the VMM service, but got the same result. I then enabled VMM tracing to collect debug logs and saw various SQL exceptions:

[0]0BAC.06EC::2013-12-31 12:46:04.590 [Microsoft-VirtualMachineManager-Debug]4,2,Catalog.cs,1077,SqlException [ex#4f] caught by scope.Complete !!! (catch SqlException) [[(SqlException#62f6e9) System.Data.SqlClient.SqlException (0x80131904): Could not obtain information about Windows NT group/user 'DOMAIN\jeff', error code 0x5.
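In hindsight the trace line already hints at the cause: error code 0x5 is the Win32 code for access denied, meaning SQL Server was refused when it tried to look up the domain account. Below is a rough Python sketch for pulling the account and error code out of such a line. The regular expression and the one-entry lookup table are my own assumptions, built from this single message rather than from any documented trace format.

```python
import re

# Tiny lookup of Win32 error codes; only the one seen in this trace is listed.
WIN32_ERRORS = {0x5: "ERROR_ACCESS_DENIED"}

# Accept straight or typographic quotes around the account name.
LOOKUP_FAILURE = re.compile(
    r"Could not obtain information about Windows NT group/user "
    r"['\u2018](?P<account>[^'\u2019]+)['\u2019], "
    r"error code (?P<code>0x[0-9a-fA-F]+)"
)


def parse_lookup_failure(line: str):
    """Return (account, error name) from a trace line, or None if it does not match."""
    match = LOOKUP_FAILURE.search(line)
    if match is None:
        return None
    code = int(match.group("code"), 16)
    # Fall back to the raw hex value for codes not in the lookup table.
    return match.group("account"), WIN32_ERRORS.get(code, hex(code))


sample = (
    "System.Data.SqlClient.SqlException (0x80131904): Could not obtain "
    "information about Windows NT group/user 'DOMAIN\\jeff', error code 0x5."
)
result = parse_lookup_failure(sample)
print(result)
```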

I was finally able to find a helpful error message in the standard VMM logs located under C:\ProgramData\VMMLogs\SCVMM.\report.txt (I probably should have looked there first):

System.AggregateException: One or more errors occurred. ---> Microsoft.VirtualManager.DB.CarmineSqlException: The SQL Server service account does not have permission to access Active Directory Domain Services (AD DS).

Ensure that the SQL Server service is running under a domain account or a computer account that has permission to access AD DS. For more information, see “Some applications and APIs require access to authorization information on account objects” in the Microsoft Knowledge Base at http://go.microsoft.com/fwlink/?LinkId=121054.
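The useful part of an AggregateException is usually the innermost exception, which .NET prints after a `--->` arrow. Here is a small Python sketch for splitting such a message into its chain. The helper names are hypothetical, and the pattern also accepts the dash-plus-`>` form that blog software tends to turn the arrow into.

```python
import re

# .NET separates outer and inner exceptions with "--->"; also accept the
# typographic form (U+2014 followed by ">") that copy and paste can produce.
_ARROW = re.compile(r"\s*(?:-{2,}|\u2014)>\s*")


def exception_chain(text: str) -> list:
    """Split a .NET exception message into outer-to-inner parts."""
    return [part.strip() for part in _ARROW.split(text) if part.strip()]


def innermost(text: str) -> str:
    """Return the last (innermost) exception in the chain, or an empty string."""
    chain = exception_chain(text)
    return chain[-1] if chain else ""


report = (
    "System.AggregateException: One or more errors occurred. ---> "
    "Microsoft.VirtualManager.DB.CarmineSqlException: The SQL Server service "
    "account does not have permission to access Active Directory Domain "
    "Services (AD DS)."
)
print(innermost(report))
```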

My local SQL instance was configured to run under a local user account, not a domain account. I re-checked the VMM installation requirements and could not find this requirement documented anywhere. Sure enough, once I reconfigured SQL to run as a domain account (I also had to fix an SPN issue: http://softwarelounge.co.uk/archives/3191) and restarted the SQL service, the VMM service started successfully.
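A quick way to catch this before installing VMM is to check which account the SQL Server service runs as. The sketch below is Python and parses the text printed by `sc.exe qc MSSQLSERVER`. It assumes a default SQL instance (hence the service name `MSSQLSERVER`), and the `sqlsvc` account in the sample output is made up for illustration. The helper names are hypothetical.

```python
import re


def service_start_name(sc_qc_output: str):
    """Extract the SERVICE_START_NAME value from `sc.exe qc <service>` output."""
    match = re.search(
        r"^\s*SERVICE_START_NAME\s*:\s*(.+?)\s*$", sc_qc_output, re.MULTILINE
    )
    return match.group(1) if match else None


def is_local_user(account: str, computer_name: str) -> bool:
    # A local user appears as ".\name" or "<COMPUTER>\name". Built-in
    # accounts such as LocalSystem have no backslash and are not flagged.
    prefix, sep, _ = account.partition("\\")
    if not sep:
        return False
    return prefix == "." or prefix.upper() == computer_name.upper()


# Illustrative output only; the account name "sqlsvc" is an assumption.
sample_output = r"""
SERVICE_NAME: MSSQLSERVER
        TYPE               : 10  WIN32_OWN_PROCESS
        START_TYPE         : 2   AUTO_START
        SERVICE_START_NAME : .\sqlsvc
"""

account = service_start_name(sample_output)
print(account, is_local_user(account, "AZPK01"))
```

If the check flags a local user, that account cannot query AD DS on SQL Server's behalf, which is the condition the VMM error message describes.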