In early 2015, Google announced they would be blanketing Charlotte, NC in fiber to support their Google Fiber internet and TV service. Since moving to Charlotte, I had been a Time Warner Cable (now Charter) internet customer, and I had been a happy Dish Network customer for the last 15 years. Having moved from New England, I desperately needed to be able to watch the Red Sox and Bruins, something being a Dish "mover" allowed me to do. Because I work from home, solid internet service was imperative, and the combined cost of Time Warner Cable's internet service and Dish Network had me thinking about switching once Google Fiber became available. Within a month of Google's announcement, Time Warner announced the launch of its MAXX service in my area, bumping speeds to 300/20 Mbps, and I promptly signed up. Not surprisingly, AT&T also rolled out its own fiber service shortly after Google Fiber was installed in our area.

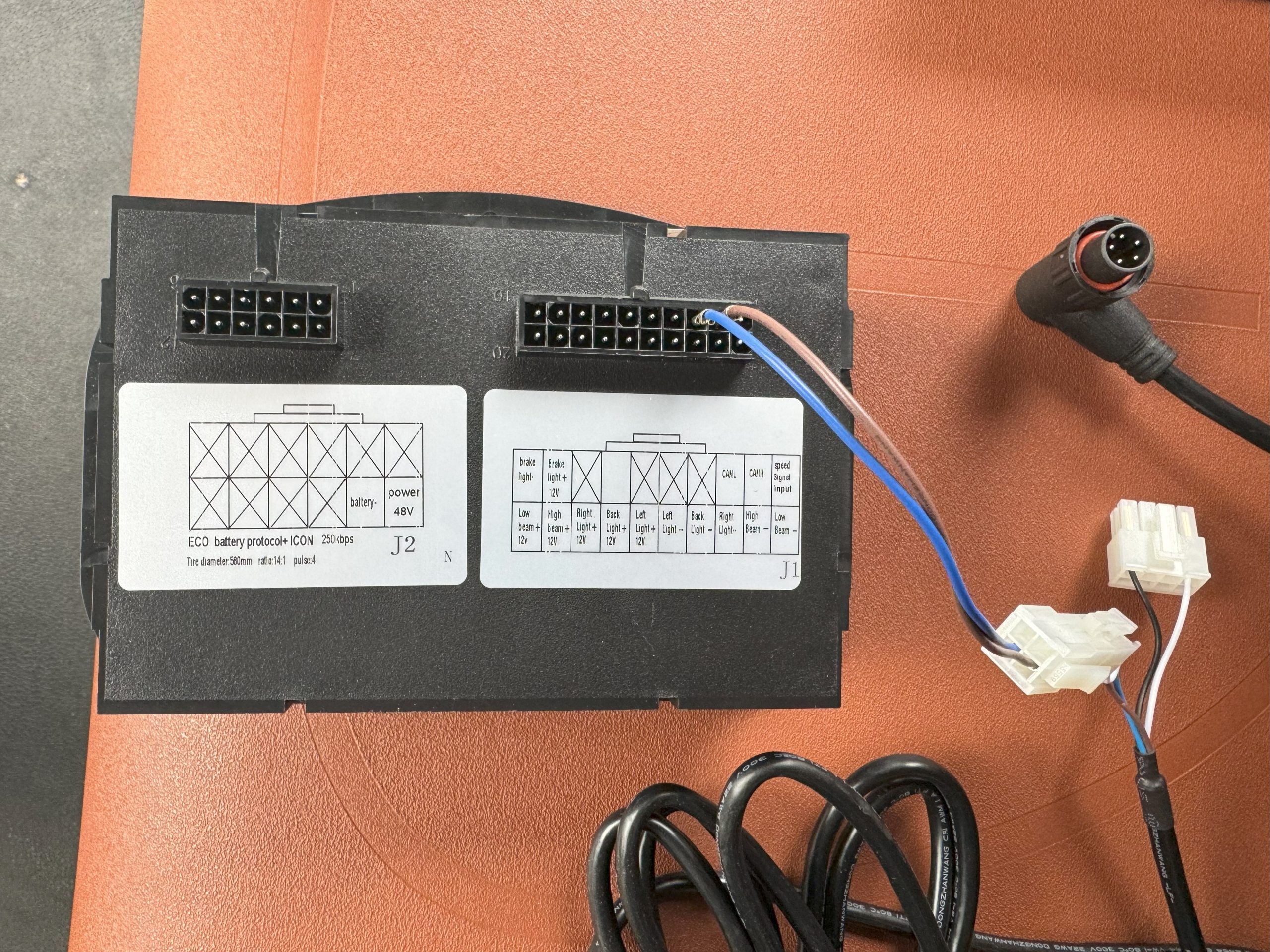

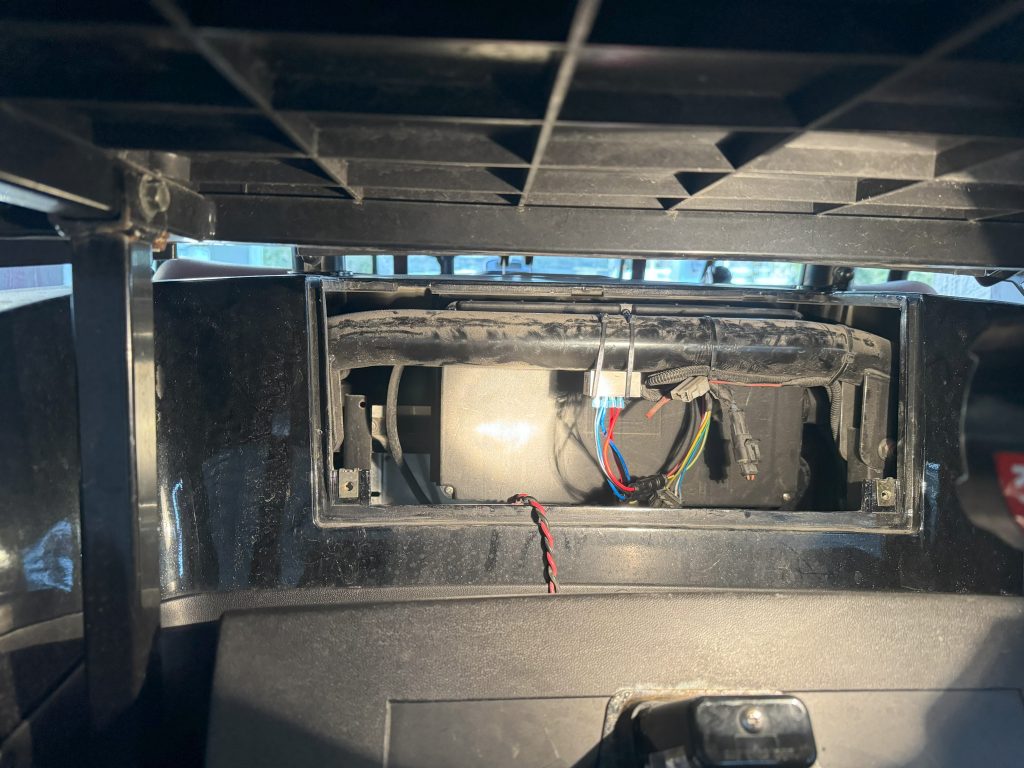

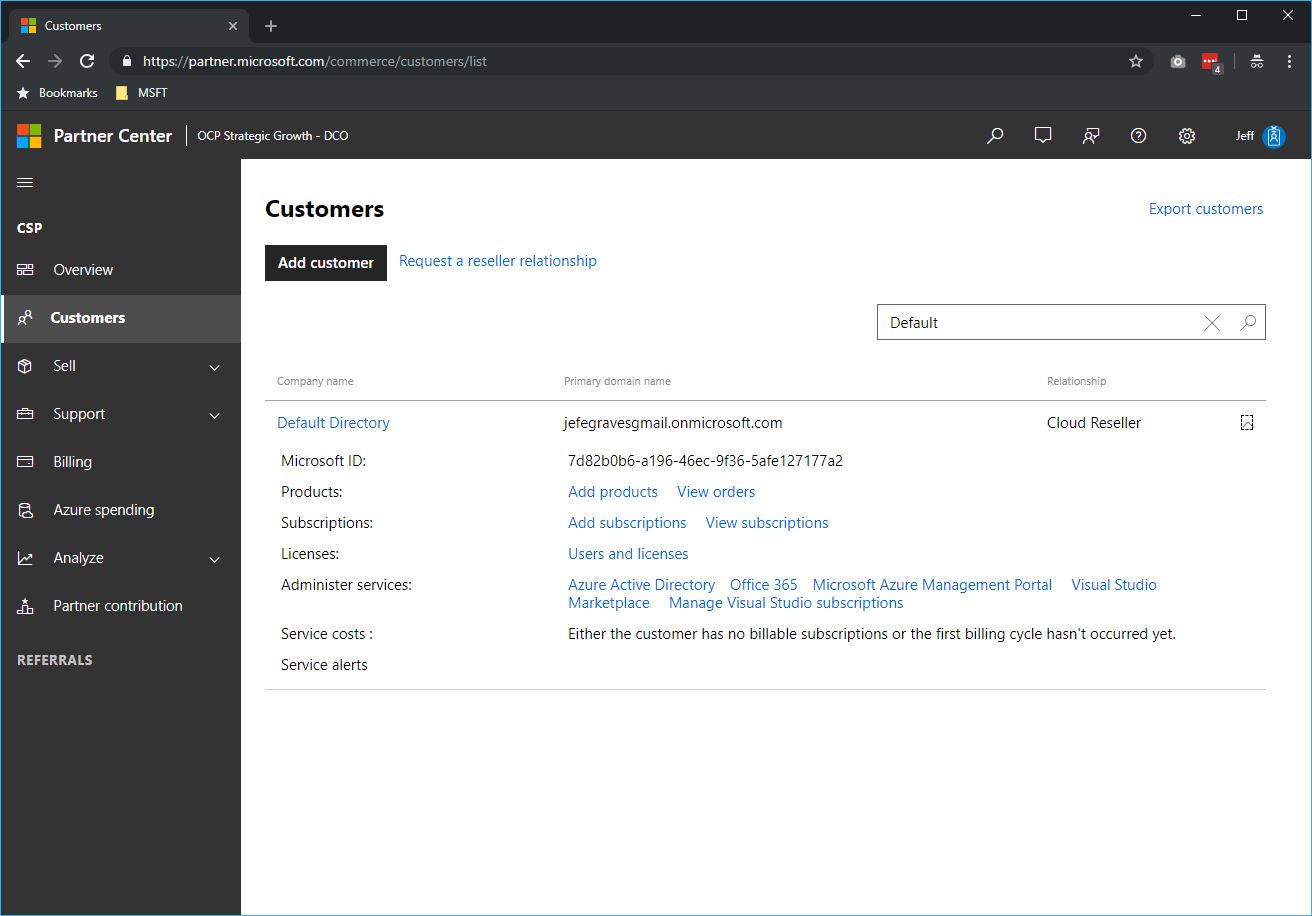

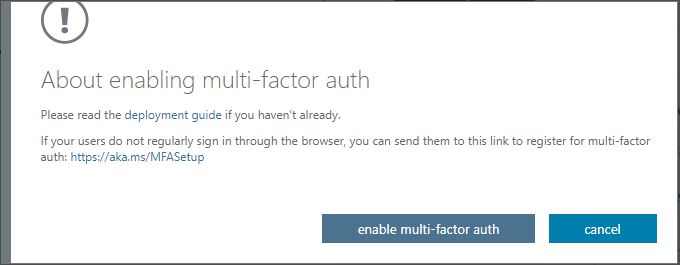

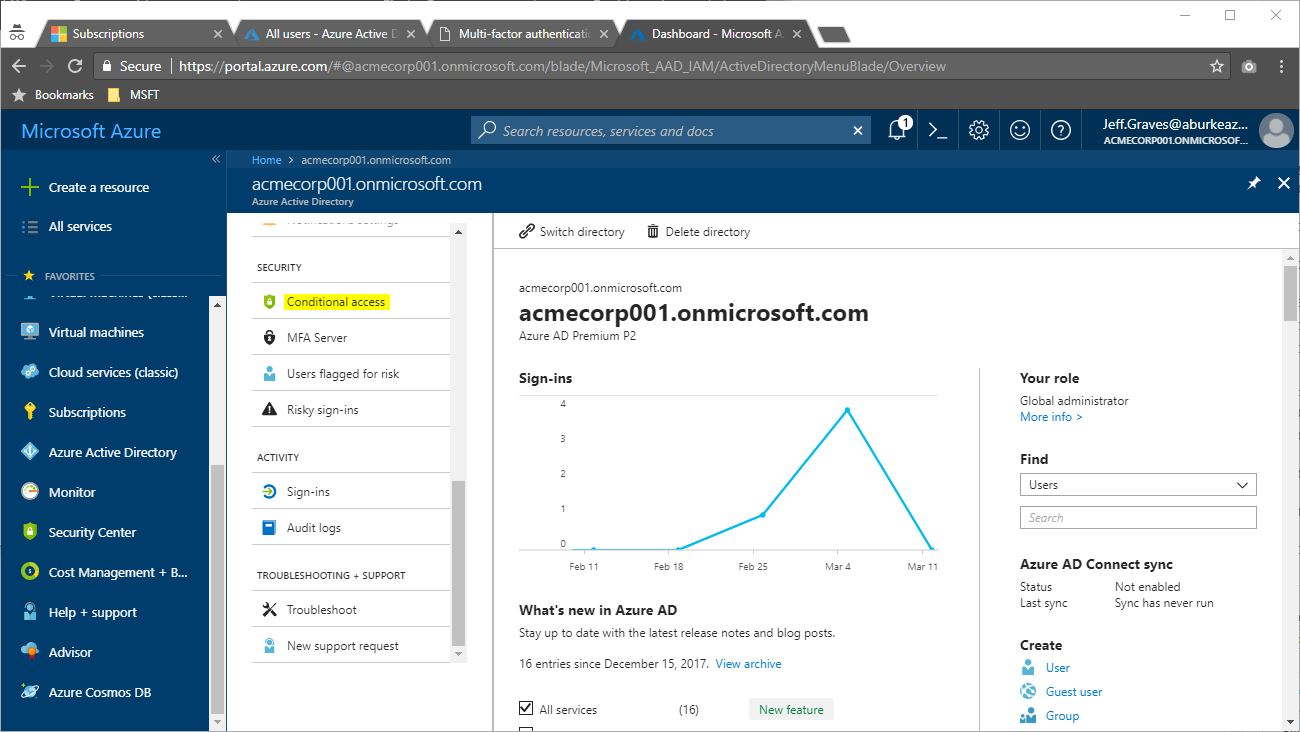

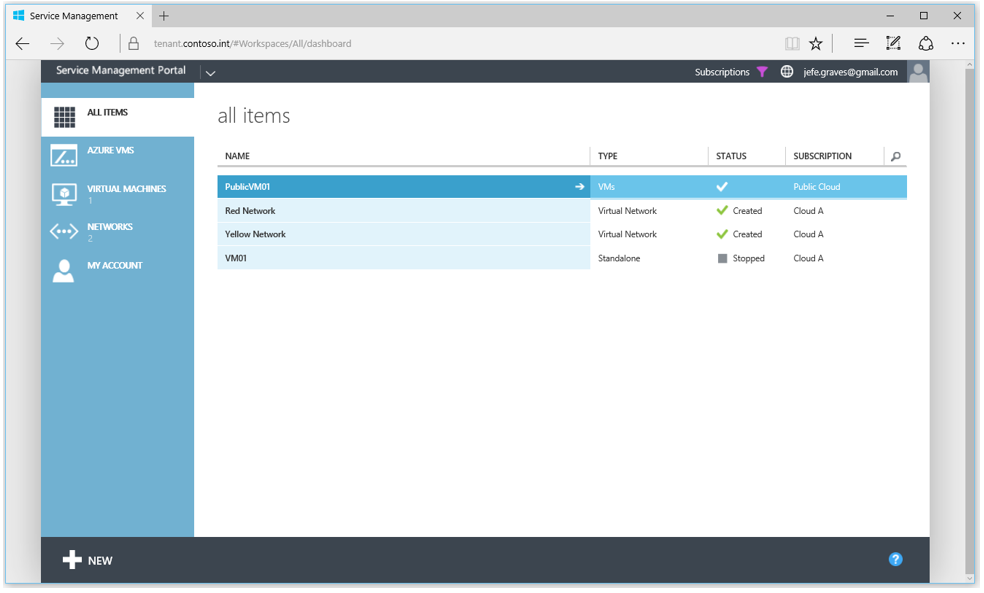

Construction made quite a mess of the neighborhood (we still have paint from the utility markings throughout the development), and we waited almost a year between when they buried the cable and when they announced service availability. The day Google announced it would accept new installations, I signed up for both TV and internet service. Soon after, a team came and installed the network demarcation point (NDP). It was another few weeks before they started scheduling installations, but I got mine scheduled for the day after they started in my area. Installation was fairly smooth: the installer placed the fiber jack in my home office next to the incoming RG6 cable from TWC, and he ran a new RG6 cable back to the TV distribution panel outside the house for TV service.

Google Fiber Jack, RG6 run to TV panel, old TWC connection

A CAT5e cable connects the fiber jack to the Network+ Box, which feeds the TV box at each TV location over RG6 coax using MoCA. Each TV box needs a straight RG6 run; no splitters are allowed after the TV distribution panel. The Network+ Box provides DVR storage and acts as a wireless router, and each TV box can extend the wireless network for larger homes. Detailed specifications for each device are available online.

Service Review

TV Service

I loved the interface on my Dish Network ViP 722k. The guide was clean, easy to understand, and customizable, with quick access to all the menus I used. I absolutely despised the Time Warner Cable interface and guide and always found them very difficult to use whenever I visited a friend's house. In fact, we have Charter Spectrum service with the same interface at a vacation rental property, and it's terrible. Switching to a new interface was going to be a challenge, but after a day or two with Google's latest Fiber TV interface, I like it at least as much as Dish Network's. There is still some room for improvement, but overall it's easy to understand and the guide works well. The interface loads quickly after powering up and shows photos shared via Google+ as a screen saver when idle. The learning remote combines a Bluetooth and an IR transmitter and has very basic TV controls (on/off, volume, input). It has no additional device modes, but it was able to learn the IR codes to control the volume on my Samsung sound bar.

The Network+ Box has an embedded 2TB hard disk that can store up to 500 hours of programming and lets you watch or record up to 8 shows at once. The DVR offers the same basic options for scheduled and one-time recordings as my Dish Network ViP 722k, and with 8 streams available, adding start and end buffers to recordings doesn't cause conflicts.
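To put the 2TB / 500 hours figure in perspective, here is a quick back-of-the-envelope calculation of the average recording bitrate it implies. It's only a rough sketch: it assumes decimal terabytes and that the capacity is spread evenly over all 500 hours, while real bitrates vary by channel.

```python
# Rough check of the DVR figures quoted above.
# Assumptions: "2TB" means 2 * 10**12 bytes (decimal, as drive makers count)
# and the 500-hour figure is spread evenly across that capacity.

DISK_BYTES = 2 * 10**12  # 2 TB, decimal
HOURS = 500              # advertised recording capacity


def implied_bitrate_mbps(disk_bytes: int, hours: float) -> float:
    """Average bitrate (megabits per second) implied by capacity / hours."""
    bits = disk_bytes * 8
    seconds = hours * 3600
    return bits / seconds / 1_000_000


rate = implied_bitrate_mbps(DISK_BYTES, HOURS)
print(f"~{rate:.1f} Mbps per recording")
print(f"~{rate * 8:.0f} Mbps if all 8 streams record at that average rate")
```

This works out to roughly 8.9 Mbps per recording. If live IPTV streams run at a similar rate (an assumption, not something Google states here), even eight at once would use well under a tenth of a 1 Gbps connection.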

Outages weren't as common as the cable providers would have you believe, but my Dish Network service did cut out from time to time in the very worst weather; I no longer have to worry about that with Google Fiber. HD picture quality is outstanding on both my 52″ 1080p and 32″ 720p TVs, as it was with Dish Network. I've had no stuttering or picture issues with the IPTV service, and changing channels is as fast as with cable or satellite.

I had Dish Network's reasonably priced Top 200 package, while Google has a single TV service tier that includes all of those channels plus some I didn't have before. Google Fiber's on-demand selection is excellent and priced about the same as what I had with Dish Network and Charter Spectrum. As with other services, you'll pay for additional TVs and premium channel add-ons, but DVR service is included at no extra charge.

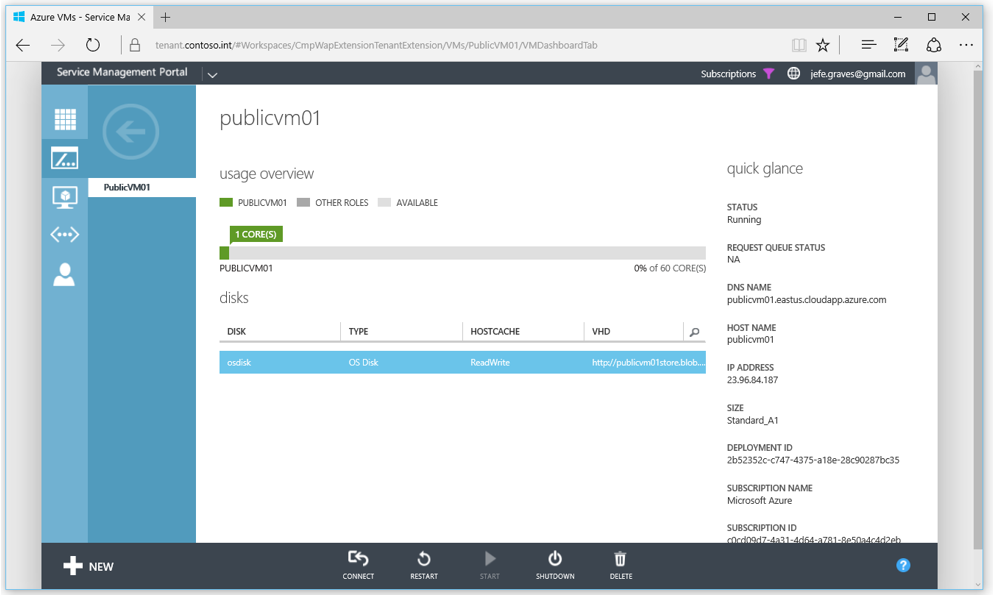

Internet Service

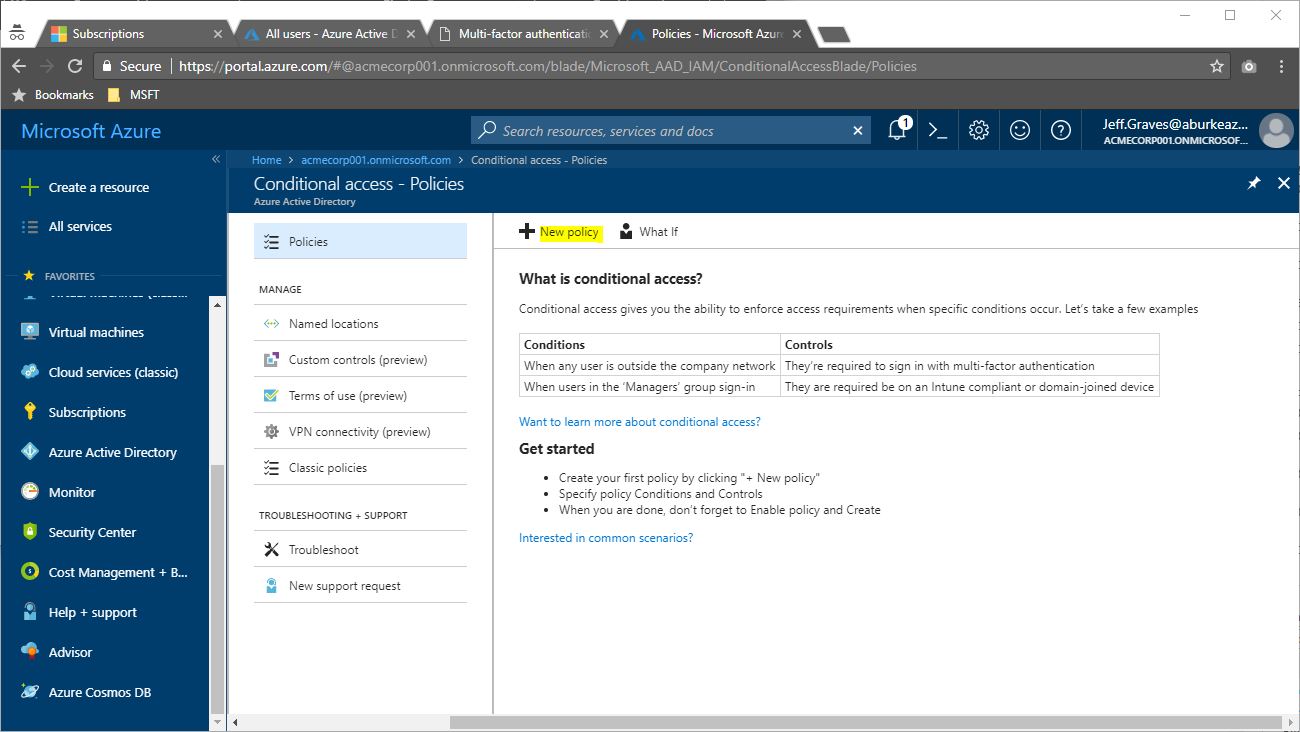

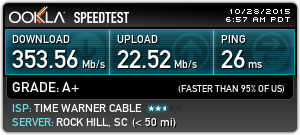

Having worked from home for the last 10 years, I've become extremely dependent on fast, reliable internet service. While Time Warner Cable MAXX was decent, I had several service interruptions over the years, some of which required visits to replace modems or outside cables. On average, I'd estimate there was a service interruption every 3-6 months. Time Warner Cable speeds were always as advertised, and sometimes even faster. Latency to most major sites hovered around 20-30 ms with Time Warner Cable and is slightly better at 10-20 ms with Google Fiber.

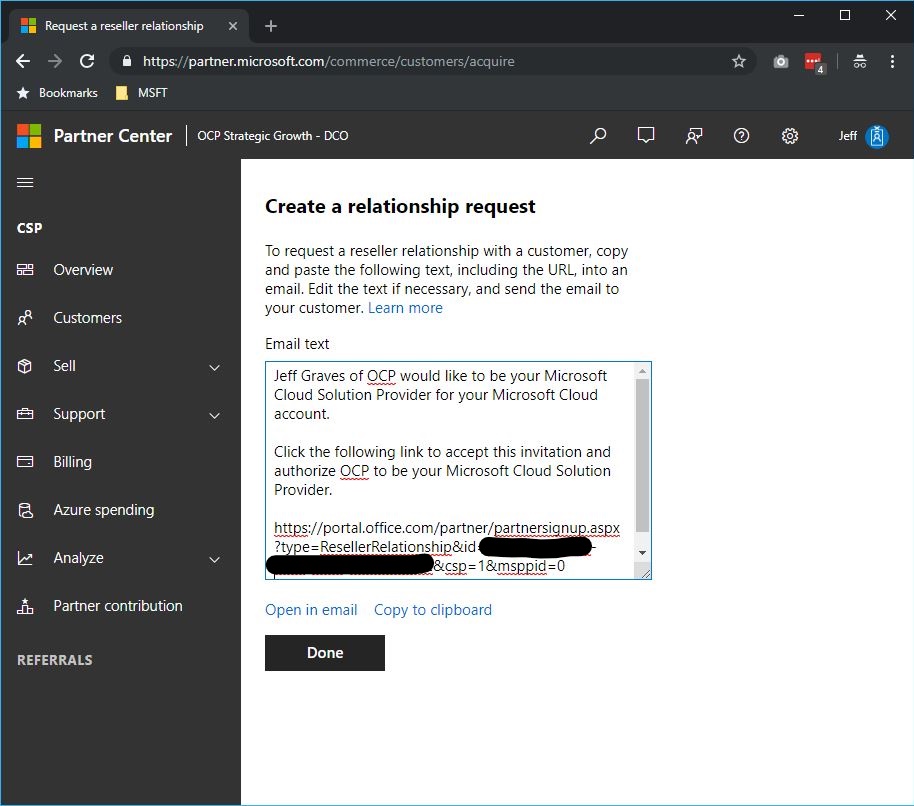

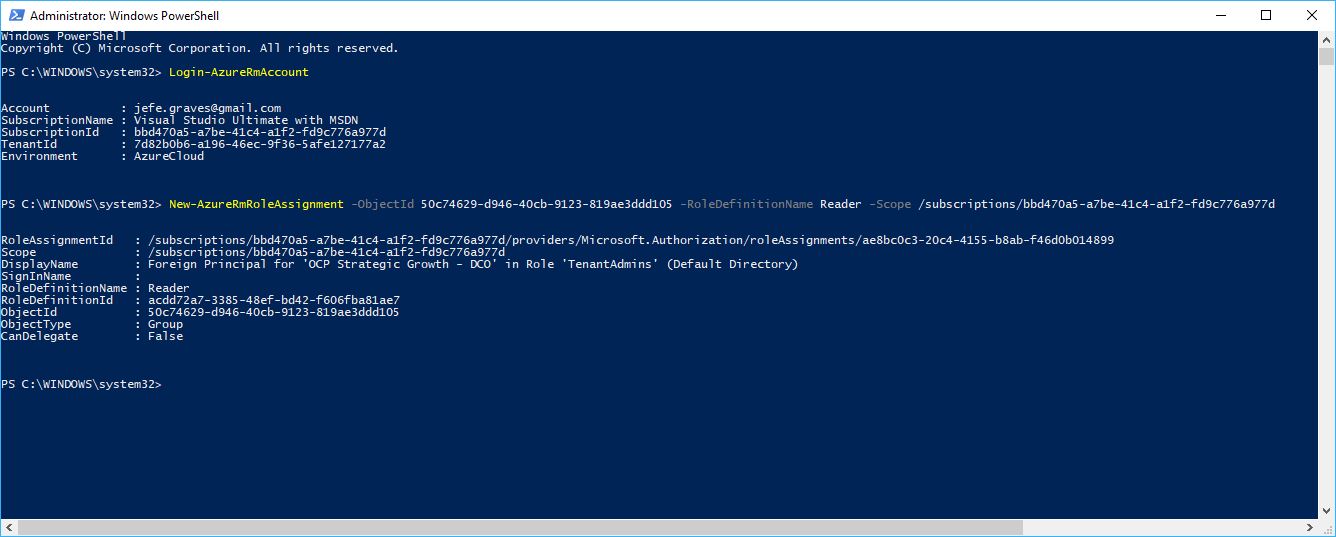

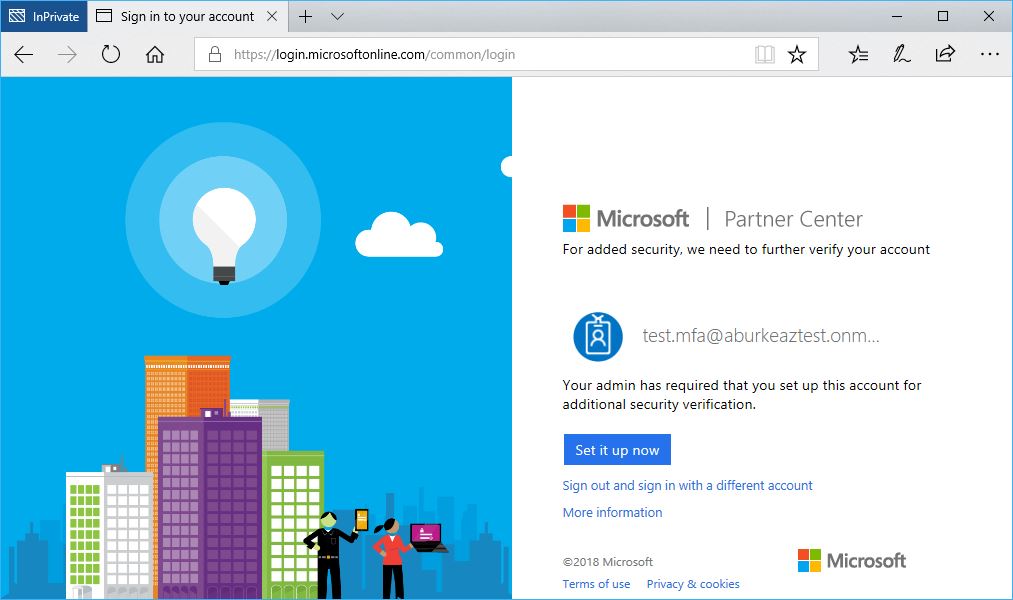

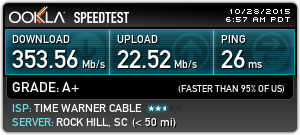

TWC Speed Test (300/20 Mbps)

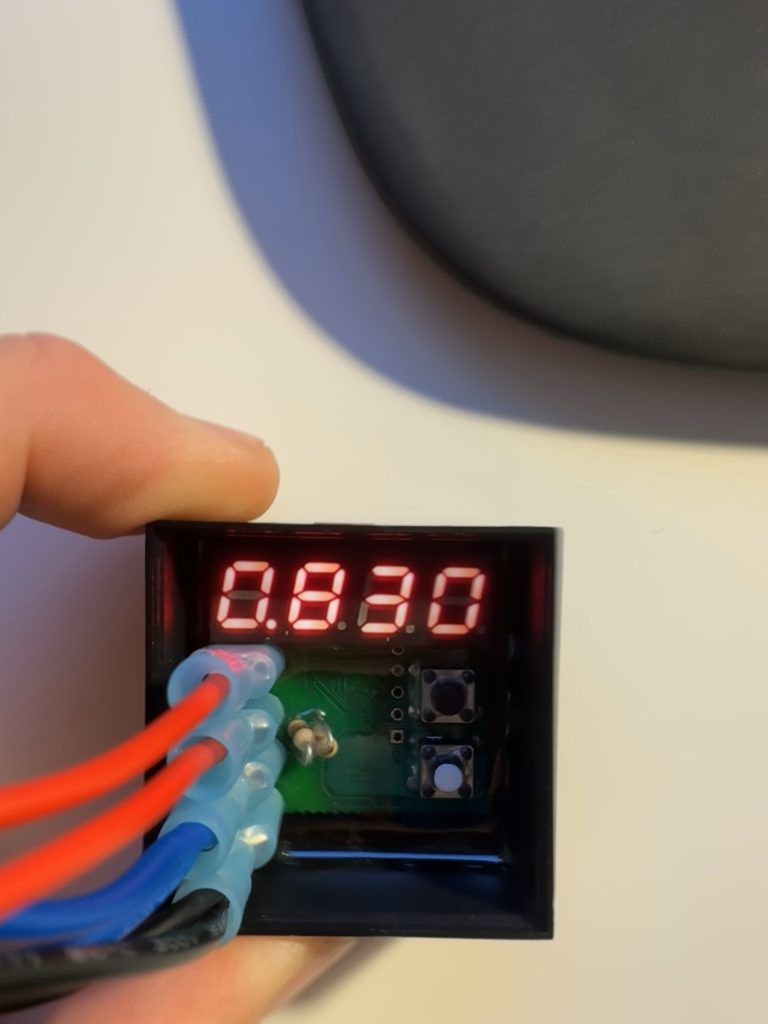

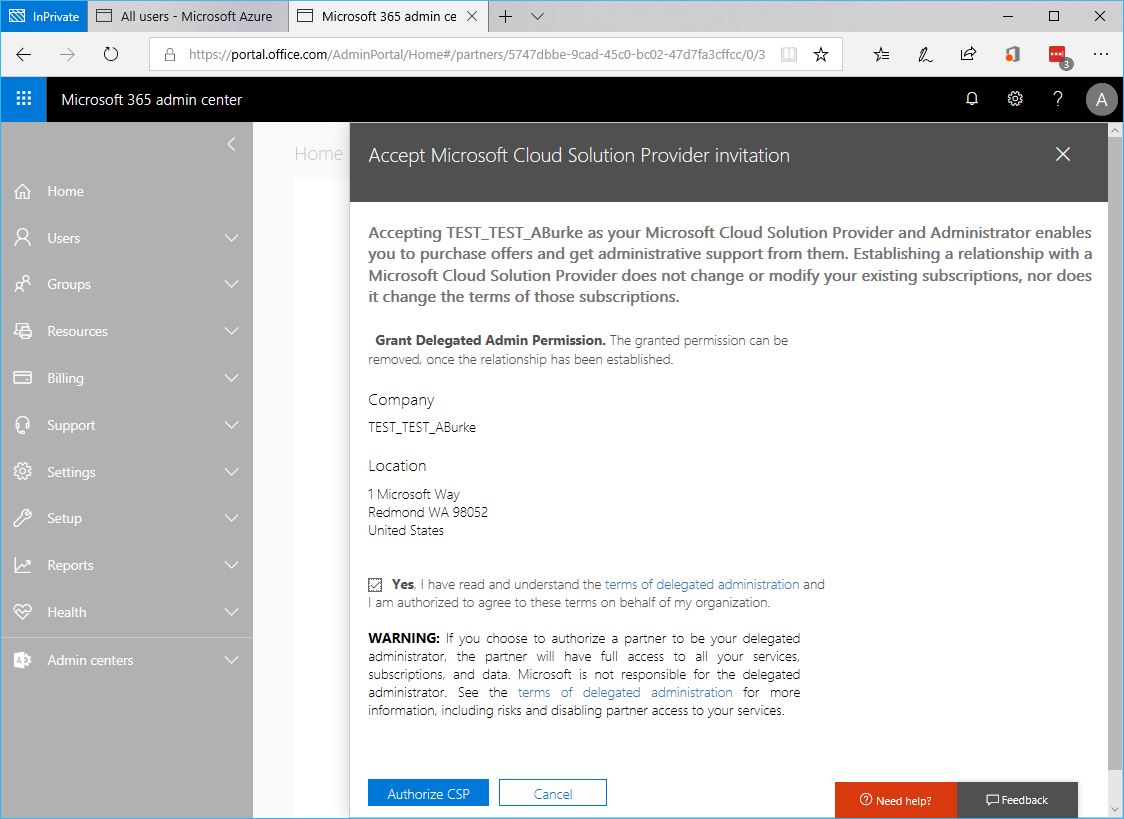

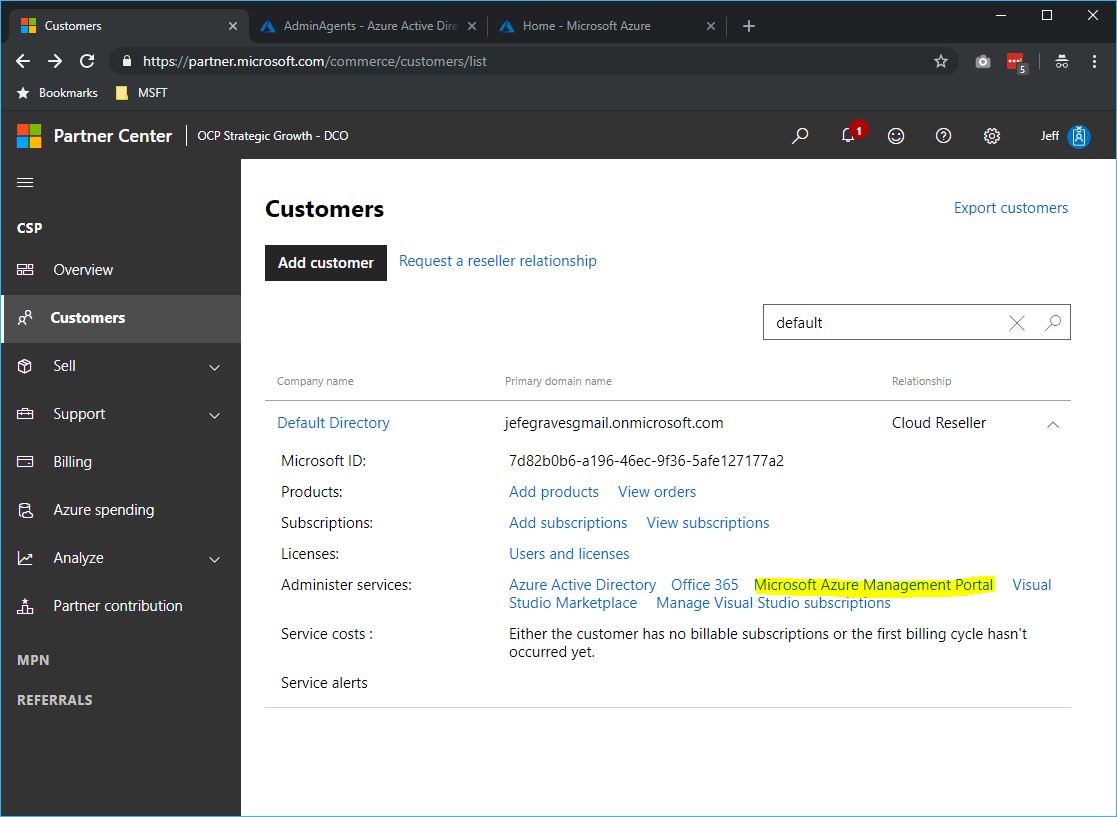

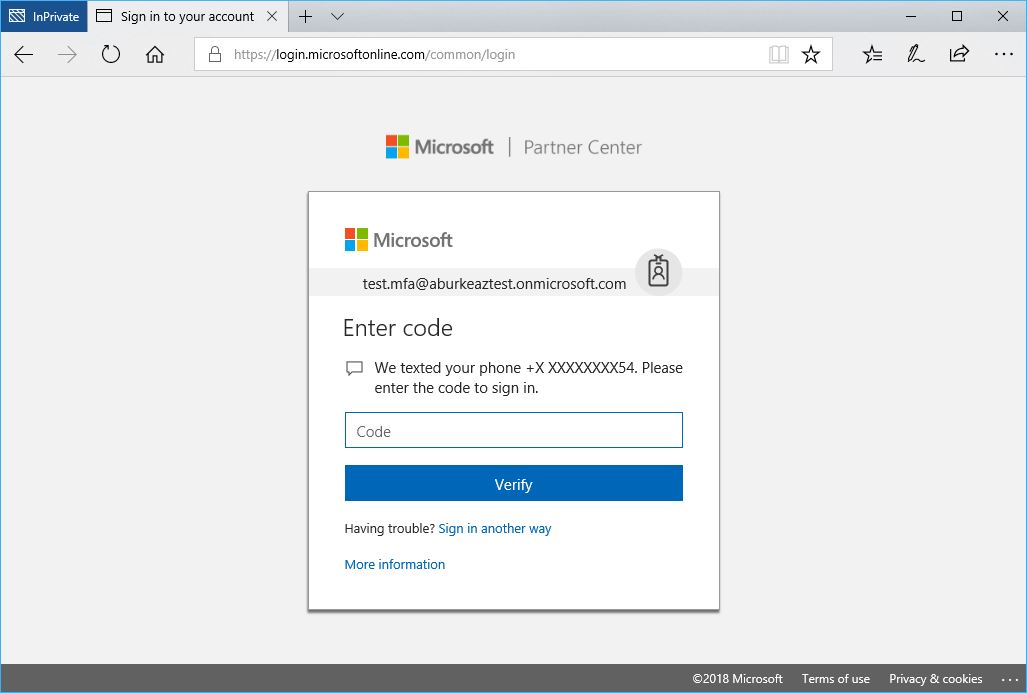

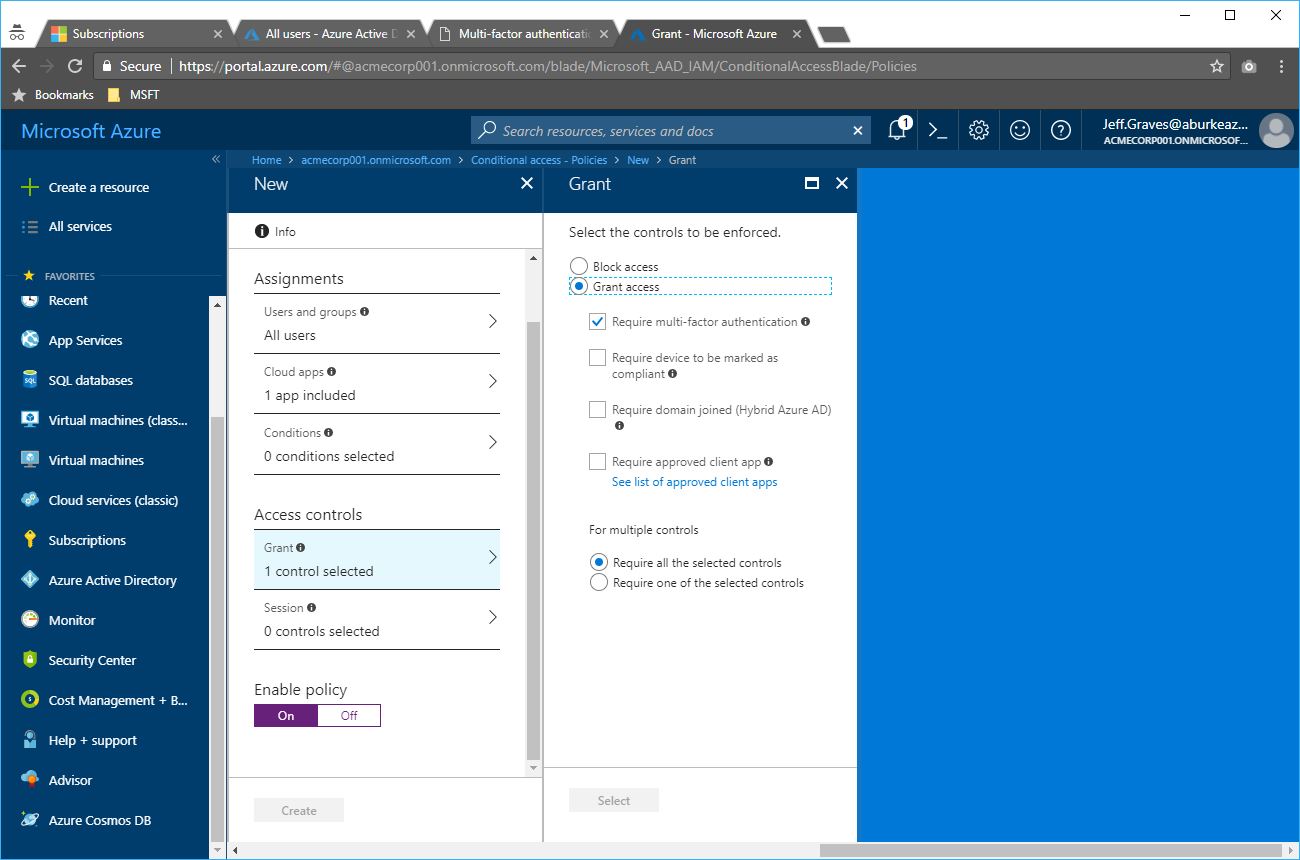

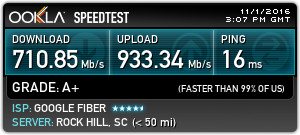

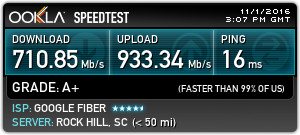

Google Fiber Speed Test (1000/1000 Mbps)

The IPTV service uses some of that bandwidth, so you'll have a hard time getting the full 1 Gbps in a speed test, but the service lives up to the hype. Apart from an interruption later on the day of installation, which the tech had warned me about, I have not had a single service interruption with Google Fiber. Google Fiber is also fully IPv6-enabled, whereas my Time Warner Cable service was IPv4 only.

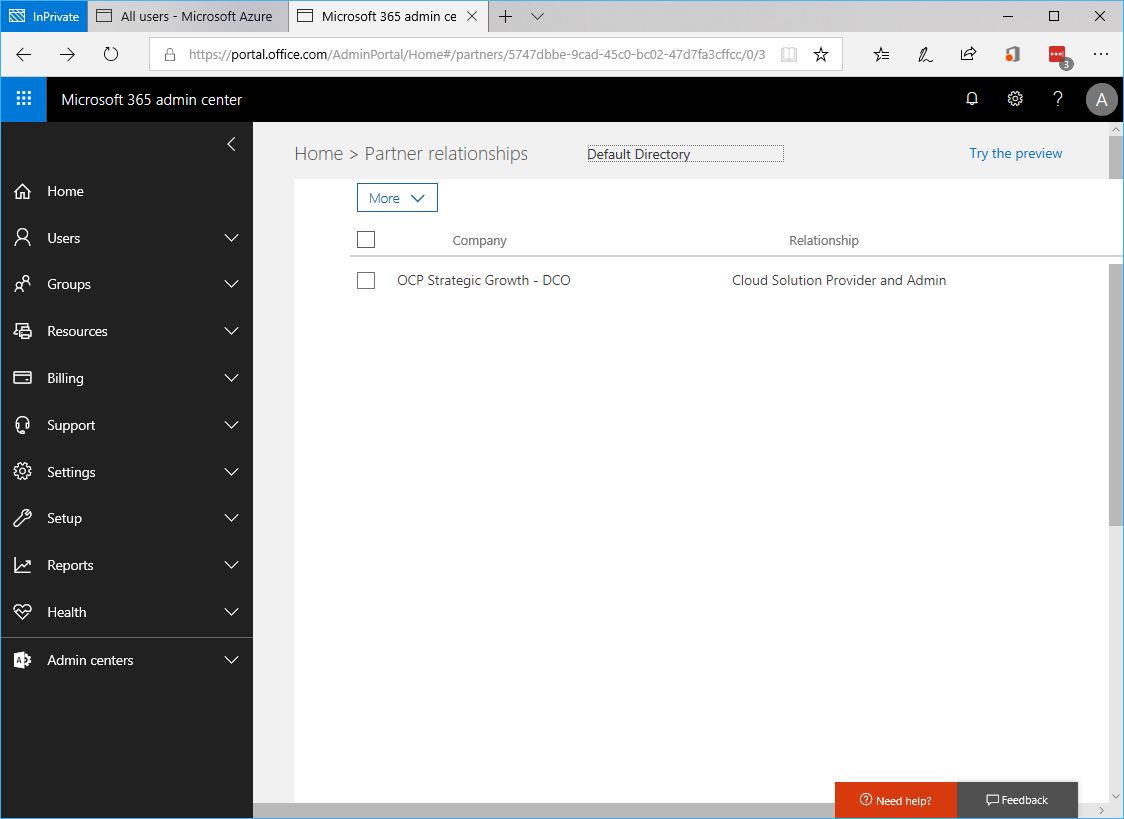

The Network+ Box is configured through a web interface at http://fiber.google.com. Before switching, I had been using an ASUS RT-AC88U router connected to a Motorola SURFboard SB6141 cable modem, a setup that allowed for a variety of advanced networking features. The Network+ Box supports DHCP settings, basic Wi-Fi settings, port forwarding, and dynamic DNS, but lacks QoS, VPN, or other advanced configuration. It has four 1 Gbps ports and supports 2.4 GHz 802.11b/g/n and 5 GHz 802.11a/n/ac. The TV boxes support the same wireless standards and each has a single 1 Gbps port for nearby devices.

Cost

To recap, I had Time Warner Cable MAXX, which ran about $112/mo with taxes and modem fees, and the Dish Network Top 200 package with a ViP 722k DVR I had purchased outright, which came to just under $100/mo. The Google Fiber 1000 + TV package includes 1000 Mbps internet and 220+ channels with HD and DVR ($130/mo), and I've added a second TV box ($5/mo), which brings my bill to $140/mo with taxes. I have lost access to NESN, though, so I'm forced to catch the Red Sox and Bruins when they're on ESPN, MLBTV, NBCSN, and NHLN. Overall, I'm very happy with the switch and would definitely recommend Google Fiber TV and internet service.
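For anyone weighing the same switch, the arithmetic behind this comparison is simple enough to sketch. The snippet below just totals the monthly figures from this post. It treats Dish's "just under $100/mo" as $100, so the savings it reports are a slight overestimate, and it ignores the one-time purchase of the ViP 722k.

```python
# Rough monthly/annual cost comparison using the figures in this post.
# Assumption: Dish's "just under $100/mo" is rounded up to $100.00,
# so the computed savings are a slight overestimate.

old_services = {
    "Time Warner Cable MAXX (incl. taxes and modem fees)": 112.00,
    "Dish Network Top 200 (rounded up from 'just under $100')": 100.00,
}
new_services = {
    "Google Fiber 1000 + TV with 2nd TV box (incl. taxes)": 140.00,
}

old_total = sum(old_services.values())
new_total = sum(new_services.values())
monthly_savings = old_total - new_total

print(f"Before: ${old_total:.2f}/mo  After: ${new_total:.2f}/mo")
print(f"Savings: about ${monthly_savings:.2f}/mo, ${monthly_savings * 12:.2f}/yr")
```

With these inputs, the switch comes out to roughly $72/mo, or about $864/yr, in savings.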