Load balancing is critical for any highly available application. In the case of websites, a webfarm fronted by a load balancer can distribute the load across multiple servers to increase scale and keep your application online during planned maintenance or when a server or application fails on a particular node. Microsoft provides a free IIS extension called Application Request Routing (ARR) that can load balance HTTP and HTTPS traffic. At OrcsWeb, we use a cluster of ARR servers to load balance our production sites.

There are plenty of resources that describe how Application Request Routing works, so I won't go into detail about it here. But how do you troubleshoot when something goes wrong? One of the more common errors generated by ARR is the 502 error, which it returns with one of two substatus codes: 502.3 and 502.4.

The 502.4 error is considerably easier to troubleshoot, as it generally means that there were no available content nodes to route the request to. This usually happens when a health check is configured for the content nodes and it is failing for all of them, leaving no healthy content node to which ARR can route the request. Obviously, the easiest solution at this point is to fix whatever is causing the health check to fail on the content nodes. ARR also has the concept of minimum servers, which can prevent a health check from taking too many nodes out of rotation. Setting it to at least 1 ensures that users don't receive a 502.4 error (though they may still see errors returned by the backend content node).
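To make the interaction between health checks and the minimum servers setting concrete, here is a toy Python model. It is a hypothetical sketch, not ARR's actual selection logic: the function name `routable_nodes` and the assumption that failing nodes are kept in rotation (in name order) until the minimum is met are illustrative only. An empty result corresponds to the situation that produces a 502.4.

```python
from __future__ import annotations


def routable_nodes(health: dict[str, bool], min_servers: int = 0) -> list[str]:
    """Toy model (hypothetical, not ARR's real algorithm) of health checks plus a minimum-servers floor.

    `health` maps each content node name to the result of its last health check.
    An empty return value means no node can take the request, i.e. a 502.4.
    """
    # Nodes that passed the health check are always eligible.
    healthy = [name for name in sorted(health) if health[name]]
    if len(healthy) >= min_servers:
        return healthy
    # Assumption: keep failing nodes in rotation, in name order,
    # until the minimum number of servers is reached.
    failing = [name for name in sorted(health) if not health[name]]
    shortfall = min(min_servers - len(healthy), len(failing))
    return healthy + failing[:shortfall]


if __name__ == "__main__":
    farm = {"web01": False, "web02": False}
    print(routable_nodes(farm))                 # [] -> nothing to route to (502.4)
    print(routable_nodes(farm, min_servers=1))  # ['web01'] stays in rotation
```

In the second call a node stays in rotation even though its health check fails, which is why users then see whatever error that node returns instead of a 502.4.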

The 502.3 error can be a little more difficult to troubleshoot. It effectively means there was a communication issue between the ARR node and the content node. Most of the time it is a timeout caused by a long-running request on the content node, which is easy to spot in the web logs. I recommend using LogParser to analyze the web logs and look for any request whose time-taken value (recorded in milliseconds) exceeds the proxy timeout configured for the webfarm. You can then either increase the proxy timeout or troubleshoot the web application to find out why the request is taking so long to process. In the query below, replace the 0 in W3SVC0 with the site id of your website, and if your web logs are large, replace *.log with the name of a specific log file to speed up processing:

LogParser.exe "select date, c-ip, cs-method, cs-uri-stem, cs-uri-query, sc-status, sc-substatus, time-taken from C:\inetpub\logs\logfiles\w3svc0\*.log where time-taken > 25000" -i:IISW3C -o:DATAGRID

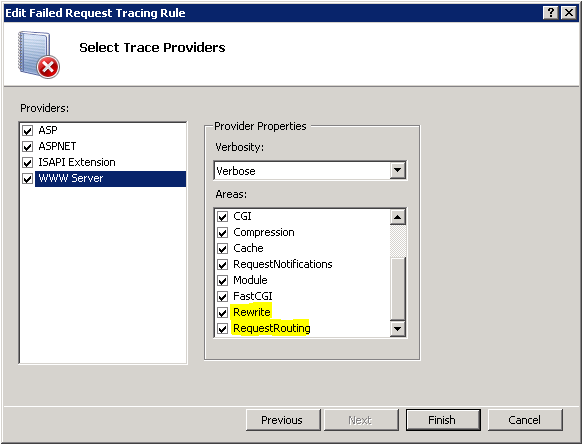

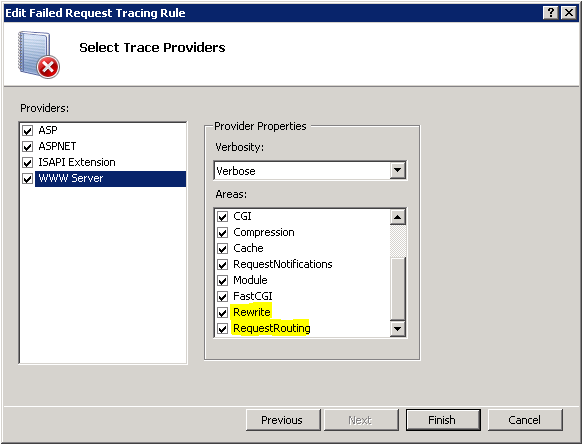
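If LogParser isn't available, the same filter can be approximated with a short Python sketch. It assumes the logs are in the W3C format that LogParser's `-i:IISW3C` input reads (a `#Fields:` directive naming the columns, space-separated values) and that the time-taken field is being logged; the function name `slow_requests` is hypothetical. As in the LogParser query, 25000 ms is only an example threshold, so set it relative to your webfarm's proxy timeout.

```python
from __future__ import annotations

import sys
from typing import Iterable, Iterator


def slow_requests(lines: Iterable[str], threshold_ms: int = 25000) -> Iterator[dict[str, str]]:
    """Yield W3C log entries whose time-taken (milliseconds) exceeds threshold_ms."""
    fields: list[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line.startswith("#Fields:"):
            # The #Fields directive names the columns and can change mid-file.
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#") or not fields:
            continue  # skip other directives, blank lines, and data before #Fields
        values = line.split(" ")
        if len(values) != len(fields):
            continue  # malformed or truncated line
        entry = dict(zip(fields, values))
        taken = entry.get("time-taken", "")
        if taken.isdigit() and int(taken) > threshold_ms:
            yield entry


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python slow_requests.py <path-to-log> [threshold-ms]
    threshold = int(sys.argv[2]) if len(sys.argv) > 2 else 25000
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log:
        for entry in slow_requests(log, threshold):
            print(entry.get("date"), entry.get("time"), entry.get("cs-uri-stem"),
                  entry.get("sc-status"), entry.get("sc-substatus"), entry["time-taken"])
```

Reading the column names from `#Fields:` rather than hard-coding positions keeps the sketch working when sites log different field sets.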

The 502.3 error can also appear when something else is happening, and when it does, it's time for deeper troubleshooting. The first step is to enable Failed Request Tracing in IIS on the ARR node, then create a rule for all content that triggers on 502.3 responses. Note that only certain modules have tracing enabled by default. To capture tracing information from the URL Rewrite and Application Request Routing modules, open your applicationHost.config file and add Rewrite and RequestRouting entries to the areas of the WWW Server provider in traceProviderDefinitions:

<traceProviderDefinitions>
  <add name="WWW Server" guid="{3a2a4e84-4c21-4981-ae10-3fda0d9b0f83}">
    <areas>
      <clear />
      <add name="Authentication" value="2" />
      <add name="Security" value="4" />
      <add name="Filter" value="8" />
      <add name="StaticFile" value="16" />
      <add name="CGI" value="32" />
      <add name="Compression" value="64" />
      <add name="Cache" value="128" />
      <add name="RequestNotifications" value="256" />
      <add name="Module" value="512" />
      <add name="FastCGI" value="4096" />
      <add name="Rewrite" value="1024" />
      <add name="RequestRouting" value="2048" />
    </areas>
  </add>
  <!-- Remaining provider definitions truncated for readability -->
</traceProviderDefinitions>

When you create your rule, make sure the new Rewrite and RequestRouting areas of the WWW Server provider are selected:

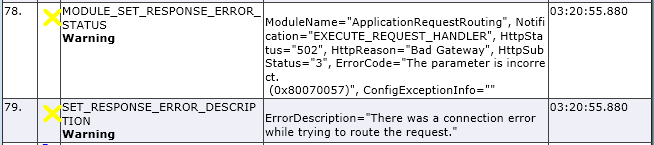

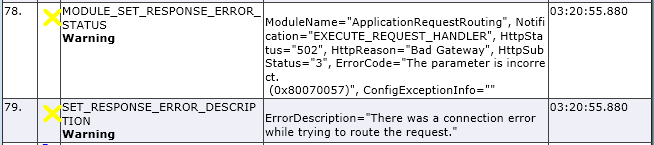

Once you've done that, attempt to reproduce the issue, and a log file will be generated in C:\inetpub\logs\FailedReqLogFiles\W3SVC0 by default (where 0 is the site id). This file can tell you where in the IIS pipeline the request is failing: look for warnings or errors returned by modules. For example, in our case the log file showed a 0x80070057 error from the ApplicationRequestRouting module.

The underlying error from the ARR module is "There was a connection error while trying to route the request." So how do we find out what that means? Well, we need to look a little deeper into ARR. ARR proxies requests to the content nodes on behalf of the client: the client's request is regenerated by ARR into a new request and sent to the content node, and once the content node responds, ARR repackages the response and sends it back to the client. To do this, ARR uses the WinHTTP interface. On Windows Server 2008 R2, you can enable WinHTTP tracing via netsh. Run this command to enable tracing:

netsh winhttp set tracing trace-file-prefix="C:\Temp\WinHttpLog" level=verbose format=hex state=enabled

Then recycle the application pool to start logging. To disable tracing, run this command:

netsh winhttp set tracing state=disabled

You will find a log file in the C:\Temp directory named WinHttpLog-w3wp.exe-<pid>.<datetime>.log. Open it to see the details of what ARR submitted to WinHTTP when generating the proxied request for the content node. Search this file for the error mentioned in the Failed Request Tracing log. In our example, the error logged by ARR is 0x80070057, with the message "The parameter is incorrect." Looking through our sample WinHTTP trace file, we find this:

15:15:51.551 ::WinHttpSendRequest(0x164d9a0, "…", 696, 0x0, 0, 0, 164d740)
15:15:51.551 ::WinHttpAddRequestHeaders(0x164d9a0, "…", 696, 0x20000000)
15:15:51.551 ::WinHttpAddRequestHeaders: error 87 [ERROR_INVALID_PARAMETER]
15:15:51.551 ::WinHttpAddRequestHeaders() returning FALSE
15:15:51.551 ::WinHttpSendRequest: error 87 [ERROR_INVALID_PARAMETER]
15:15:51.551 ::WinHttpSendRequest() returning FALSE
15:15:51.551 ::WinHttpCloseHandle(0x164d9a0)
15:15:51.551 ::usr-req 0163D520 is shutting down
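The Failed Request Tracing code and the WinHTTP error describe the same failure in two notations: 0x80070057 is an HRESULT with facility 7 (FACILITY_WIN32) wrapping Win32 error 0x57, which is 87 (ERROR_INVALID_PARAMETER). The Python sketch below performs that conversion, mirroring the Windows `HRESULT_FROM_WIN32` macro, and then scans trace lines for the matching error. The regular expression is based only on the sample lines above, so it assumes other traces use the same `::Function: error N` layout; the function names are hypothetical.

```python
from __future__ import annotations

import re
from typing import Iterable, Iterator, Optional

FACILITY_WIN32 = 7  # facility code used for HRESULTs that wrap Win32 errors


def hresult_from_win32(code: int) -> int:
    """Wrap a Win32 error code in an HRESULT, mirroring the HRESULT_FROM_WIN32 macro."""
    if code <= 0:
        return code & 0xFFFFFFFF
    return (code & 0xFFFF) | (FACILITY_WIN32 << 16) | 0x80000000


def win32_from_hresult(hresult: int) -> Optional[int]:
    """Recover the Win32 code from a failing FACILITY_WIN32 HRESULT, else None."""
    is_failure = bool(hresult & 0x80000000)  # severity bit
    facility = (hresult >> 16) & 0x7FF       # 11-bit facility field
    if not is_failure or facility != FACILITY_WIN32:
        return None
    return hresult & 0xFFFF


# Matches lines such as "::WinHttpSendRequest: error 87 [ERROR_INVALID_PARAMETER]"
ERROR_LINE = re.compile(r"::(\w+): error (\d+)")


def winhttp_errors(lines: Iterable[str], win32_code: int) -> Iterator[tuple[str, str]]:
    """Yield (API name, trace line) for every trace line reporting win32_code."""
    for line in lines:
        match = ERROR_LINE.search(line)
        if match and int(match.group(2)) == win32_code:
            yield match.group(1), line.strip()


if __name__ == "__main__":
    print(win32_from_hresult(0x80070057))  # 87, i.e. ERROR_INVALID_PARAMETER
```

Feeding the WinHTTP log file to `winhttp_errors(..., 87)` lists the API calls that failed, which in the sample above are WinHttpAddRequestHeaders and WinHttpSendRequest.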

I replaced the actual header value with "…" in the sample above, but we can see that WinHTTP fails while adding the request headers for the proxied request to the content node. Further investigation found that this was due to Internet Explorer passing unencoded non-ASCII characters in the Referer header. That is not valid: the Referer value must be a URI, and non-ASCII characters in a URI must be percent-encoded (RFC 3986). To resolve this specific issue, we can either fix the source HTML to encode the characters, or modify the routing URL Rewrite rule to always encode the Referer header:

<rule name="www.orcsweb.com">
  <match url=".*" />
  <serverVariables>
    <set name="HTTP_REFERER" value="{UrlEncode:{HTTP_REFERER}}" />
  </serverVariables>
  <action type="Rewrite" url="http://www.orcsweb.com/{R:0}" />
</rule>
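To check which Referer values would trip this, or to see what an encoded value looks like, here is a small Python sketch. It flags non-ASCII characters in a header value and percent-encodes only those characters as UTF-8. This is a narrower transformation than the rule above: `{UrlEncode:...}` runs the value through URL Rewrite's own encoding function, and this sketch makes no claim to reproduce its output byte for byte. The function names and the example URL are hypothetical.

```python
from __future__ import annotations

from urllib.parse import quote


def non_ascii_chars(header_value: str) -> list[str]:
    """Return the characters in a header value that fall outside 7-bit ASCII."""
    return [ch for ch in header_value if ord(ch) > 127]


def encode_non_ascii(url: str) -> str:
    """Percent-encode only non-ASCII characters (as UTF-8), leaving the rest of the URL intact."""
    return "".join(ch if ord(ch) < 128 else quote(ch, safe="") for ch in url)


if __name__ == "__main__":
    referer = "http://www.example.com/café"  # hypothetical Referer value
    print(non_ascii_chars(referer))   # ['é']
    print(encode_non_ascii(referer))  # http://www.example.com/caf%C3%A9
```

Encoding only the offending characters keeps the scheme, host, and path separators readable, so the content node still receives a usable URL.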