There are several reasons you may want to audit the data in your SQL databases. Auditing helps when you are tracking down a software bug, piecing together the source of unexpected data, or meeting compliance requirements. Depending on your needs, there are several ways to accomplish this. If you need auditing in order to track and revert changes to data, trigger-based table auditing is probably best.

However, when you need to generate audit trails for compliance reasons, Microsoft’s SQL Server has a few built-in ways of doing so. C2 auditing is supported, but the volume of data it generates makes it impractical for most installations. Starting with SQL Server 2008, Microsoft introduced a high-performance feature called SQL Server Audit. It operates at both the server level (included with all editions) and the database level (Enterprise edition). Edition availability has changed between releases, so check the feature list for your version. SQL Server Audit lets you audit a wide range of server-level and database-level actions, and can help demonstrate that your SQL Server footprint is in compliance.

SQL Server Audit is composed of a few different components. At the server level, you define an audit that writes to a file, the Windows Application log, or the Windows Security log. Logging to a file has the least performance overhead, and writing to the Security log requires special permissions. From there, you can attach a server audit specification, a database audit specification, or both. The auditing rules can be fine-tuned to apply to specific objects in the database and to specific user principals. For this example, I will configure auditing of Update and Delete events for all tables in a database for all users. This scenario can effectively meet a requirement commonly known as File Integrity Monitoring or Change Detection for audit trails.

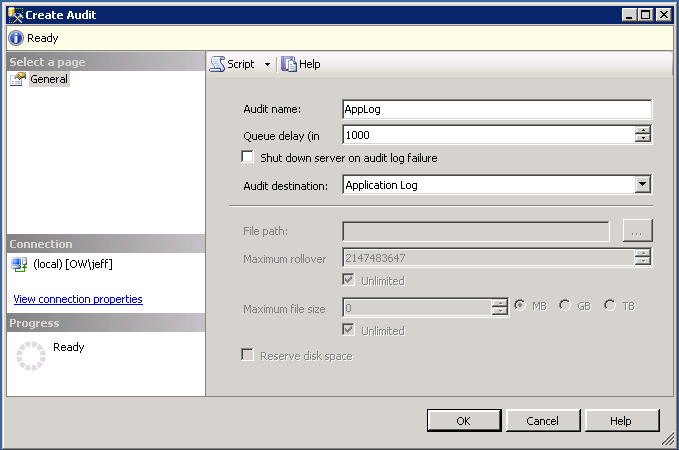

First, we’ll set up an audit that logs events to the Application Log. Under the server's Security node, right-click Audits and select New Audit. Give the audit an appropriate name (AppLog in this example) and select Application Log as the destination. Click OK. Then right-click the audit that was created and select Enable.

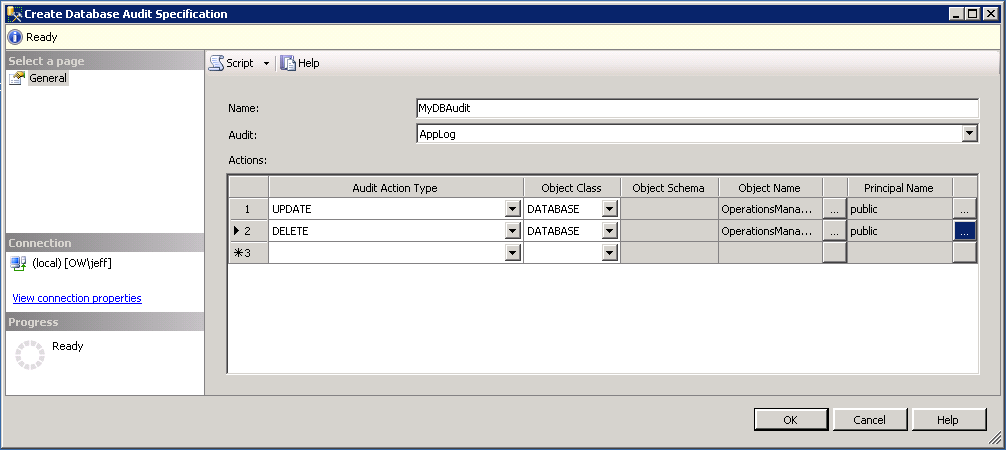
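
If you prefer to script this step rather than click through the dialog, a rough T-SQL equivalent is sketched below. It assumes the audit is named `AppLog`, as in this walkthrough, and leaves every other option at its default; adjust it to your own environment before running it.

```sql
-- Sketch only: server audits are server-level objects, so run this from master.
USE master;
GO

-- Create an audit that writes to the Windows Application log.
-- All other options (queue delay, on-failure behavior) are left at their defaults.
CREATE SERVER AUDIT [AppLog]
TO APPLICATION_LOG;
GO

-- Audits are created in a disabled state; turn this one on.
ALTER SERVER AUDIT [AppLog]
WITH (STATE = ON);
GO
```

Creating or altering a server audit requires the ALTER ANY SERVER AUDIT or CONTROL SERVER permission.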

Next, go to the database you would like to audit. Under its Security node, right-click Database Audit Specifications and select New Database Audit Specification. Name the database audit specification and select the appropriate audit (AppLog). Under Actions, select the actions you would like to audit, one per row. For each row, set the Object Class to Database, select the database in the Object Name column, and select the [public] database role in the Principal Name column to audit all users. Even though the pop-up window will allow you to select multiple items, each row can contain only one object and one principal. Click OK. Then right-click the database audit specification that was created and select Enable.

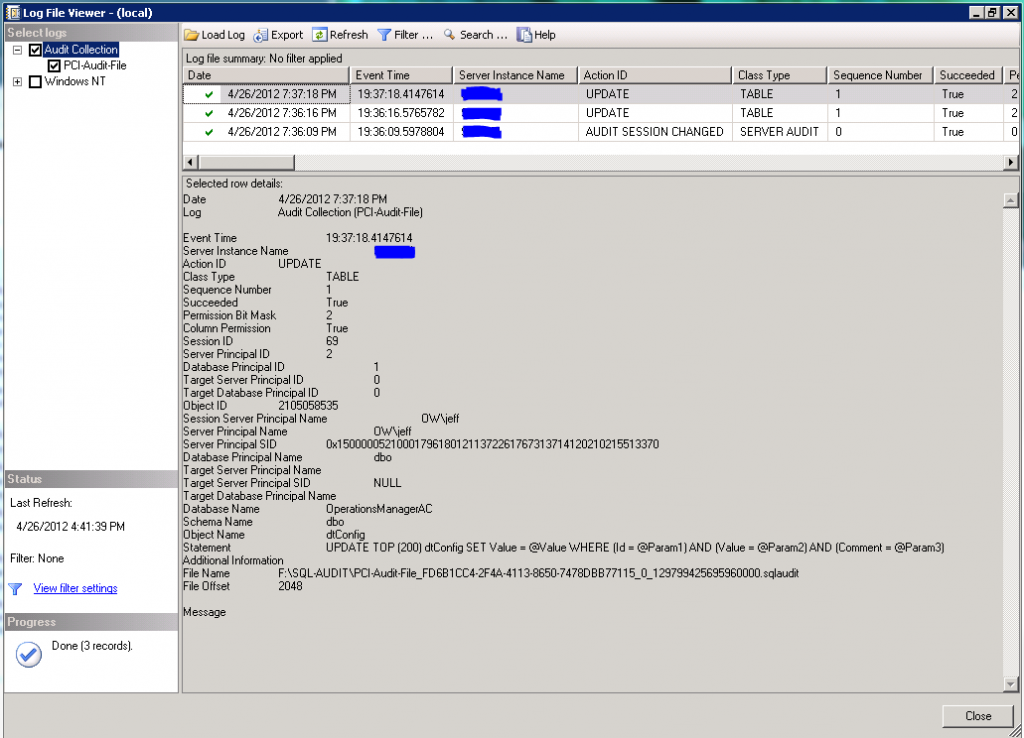
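
The same specification can be scripted. The following is a minimal sketch, not a definitive configuration: the database name `SalesDb` and the specification name `AuditUpdateDelete` are hypothetical placeholders, and it assumes the `AppLog` audit from the previous step already exists.

```sql
-- Sketch only: [SalesDb] and [AuditUpdateDelete] are placeholder names.
USE [SalesDb];
GO

-- Audit UPDATE and DELETE on every object in the database,
-- for every user (all users are members of the public role).
CREATE DATABASE AUDIT SPECIFICATION [AuditUpdateDelete]
FOR SERVER AUDIT [AppLog]
    ADD (UPDATE ON DATABASE::[SalesDb] BY [public]),
    ADD (DELETE ON DATABASE::[SalesDb] BY [public])
WITH (STATE = ON);  -- create the specification already enabled
GO
```

Each `ADD` clause corresponds to one row in the Actions grid: one action, one securable, one principal.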

Once both are enabled, you can test your audit configuration by updating or deleting data in any table in the audited database. Then right-click the audit at the server level (under Security, Audits) and select View Audit Logs to see the generated audit events.
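
As one way to run that test, the sketch below creates a throwaway table, performs an update and a delete against it, and then checks that the audit and the specification report as enabled. The database name `SalesDb` and the table `dbo.AuditSmokeTest` are hypothetical; use a database and table that suit your environment.

```sql
-- Sketch only: [SalesDb] and dbo.AuditSmokeTest are placeholder names.
USE [SalesDb];
GO

-- Scratch table used purely to trigger audit events.
CREATE TABLE dbo.AuditSmokeTest (Id int PRIMARY KEY, Note varchar(50));
INSERT INTO dbo.AuditSmokeTest (Id, Note) VALUES (1, 'before');

UPDATE dbo.AuditSmokeTest SET Note = 'after' WHERE Id = 1;  -- should produce an UPDATE audit event
DELETE FROM dbo.AuditSmokeTest WHERE Id = 1;                -- should produce a DELETE audit event

DROP TABLE dbo.AuditSmokeTest;
GO

-- Confirm the audit and the database audit specification are enabled.
SELECT name, type_desc, is_state_enabled FROM sys.server_audits;
SELECT name, is_state_enabled FROM sys.database_audit_specifications;
GO
```

Because this audit targets the Application log, the events should also be visible in the Windows Event Viewer under the Application log, in addition to the View Audit Logs window.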