We’re wrapping up a project that involved Windows Storage Spaces on Server 2012 R2. I was very excited to get my hands on new SSDs and test out Tiered Storage Spaces with Hyper-V. As it turns out, the newest SSD technology, combined with the default configuration of Storage Spaces, is killing the performance of VMs.

First, it’s important to understand sector sizes on physical disks, as this is the crux of the issue. The sector size is the smallest unit of data the disk controller inside your hard disk actually writes to the storage medium. Since the invention of the hard disk, sector sizes have been 512 bytes, and many other aspects of storage are built on this premise. Until recently, this did not pose a problem. As disks grew larger and larger, however, it caused capacity problems. In fact, the 512-byte sector is the reason for the 2.2TB limit of MBR partitions, because MBR addresses sectors with 32-bit numbers.
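The 2.2TB figure falls straight out of the arithmetic: MBR partition entries store the starting sector and sector count as 32-bit values, so the addressable size is 2^32 sectors times the sector size. A small sketch of that calculation, with 4k logical sectors shown for comparison (`mbr_limit_bytes` is just an illustrative helper name):

```python
# MBR partition entries hold 32-bit sector numbers (LBAs), so at most
# 2**32 sectors can be addressed, whatever the sector size is.
MAX_MBR_SECTORS = 2 ** 32


def mbr_limit_bytes(sector_size: int) -> int:
    """Largest size MBR can address for a given logical sector size."""
    return MAX_MBR_SECTORS * sector_size


for size in (512, 4096):
    limit = mbr_limit_bytes(size)
    print(f"{size}-byte sectors: {limit:,} bytes (~{limit / 1e12:.1f} TB)")
```

With 512-byte sectors this gives 2,199,023,255,552 bytes, the familiar 2.2TB ceiling.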

Disk manufacturers realized that 512-byte sectors would not be sustainable at larger capacities and, beginning in 2007, started introducing disks with 4k sectors, aka Advanced Format. To ensure compatibility, they used 512-byte emulation, aka 512e: the disk controller accepts 512-byte reads and writes but uses a 4k physical sector. To do this, the drive reads the full 4k physical sector from the medium into its internal cache; the controller then either modifies the relevant 512 bytes and writes the 4k back to disk, or extracts the requested 512 bytes and sends them to the system. Manufacturers took this additional processing into account when specifying drive performance. There are also 4k native drives, which use a 4k physical sector and do not support this 512-byte translation in the disk controller; instead, they expect the system to send 4k blocks to disk.
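To make the 512e translation concrete, here is a toy model of what the drive does for a single 512-byte request, with the medium simulated as a `bytearray`. It is a sketch of the idea only, not real firmware; `firmware_write_512` and `firmware_read_512` are hypothetical names.

```python
PHYSICAL_SECTOR = 4096  # what a 512e drive really stores per sector
LOGICAL_SECTOR = 512    # what a 512e drive advertises to the host


def firmware_write_512(medium: bytearray, lba: int, data: bytes) -> None:
    """Toy model of a 512e drive servicing one 512-byte logical write."""
    if len(data) != LOGICAL_SECTOR:
        raise ValueError("expected exactly one 512-byte logical sector")
    offset = lba * LOGICAL_SECTOR
    start = offset - offset % PHYSICAL_SECTOR  # first byte of the 4k sector
    # Read: copy the whole 4k physical sector into the drive's cache.
    cache = bytearray(medium[start:start + PHYSICAL_SECTOR])
    # Modify: overwrite only the 512 bytes the host sent.
    inner = offset - start
    cache[inner:inner + LOGICAL_SECTOR] = data
    # Write: commit the full 4k sector back to the medium.
    medium[start:start + PHYSICAL_SECTOR] = cache


def firmware_read_512(medium: bytearray, lba: int) -> bytes:
    """Read path: fetch the 4k sector, return only the requested 512 bytes."""
    offset = lba * LOGICAL_SECTOR
    start = offset - offset % PHYSICAL_SECTOR
    cache = bytes(medium[start:start + PHYSICAL_SECTOR])
    inner = offset - start
    return cache[inner:inner + LOGICAL_SECTOR]


disk = bytearray(2 * PHYSICAL_SECTOR)         # two zero-filled 4k sectors
firmware_write_512(disk, 9, b"\xab" * 512)    # LBA 9 sits inside physical sector 1
print(firmware_read_512(disk, 9)[:4])
```

Every 512-byte write costs the drive a full 4k read and a full 4k write, which is the extra work manufacturers fold into their performance specs.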

The key thing to understand is that SSDs have always had a physical sector size of 4k, even if they advertise 512 bytes. By definition, they are either 512e or 4k native drives. Additionally, Windows accommodates 4k native drives by performing, at the OS level, the same Read-Modify-Write (aka RMW) operations that the disk controller normally performs on 512e disks. This means that if the OS sees a disk with a 4k sector size but receives a 512-byte write, it will read the full 4k of data from disk into memory, replace the 512 bytes in memory, then flush the 4k of data from memory down to disk.
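The OS-level decision can be sketched as a function of a write's byte offset, its length, and the disk's sector size. Any sector that the write covers only partially must be read first, patched, and written back whole. `plan_write` is a hypothetical helper for illustration, not a Windows API.

```python
def plan_write(offset: int, length: int, sector: int) -> dict:
    """Model how a write lands on a device with `sector`-byte sectors.

    Sectors only partly covered by the write (at its start or end) must be
    read into memory first, patched, and written back whole: that is RMW.
    """
    if length <= 0:
        raise ValueError("length must be positive")
    first = offset // sector
    last = (offset + length - 1) // sector
    partial = set()
    if offset % sector:
        partial.add(first)
    if (offset + length) % sector:
        partial.add(last)
    sectors = last - first + 1
    return {
        "rmw": bool(partial),
        "sectors_read_first": len(partial),
        "bytes_written": sectors * sector,
    }


# A 512-byte write to a 4k-sector disk: one 4k read, then one 4k write.
print(plan_write(offset=0, length=512, sector=4096))
# -> {'rmw': True, 'sectors_read_first': 1, 'bytes_written': 4096}
# The same 512 bytes on a 512-byte-sector disk: no RMW.
print(plan_write(offset=0, length=512, sector=512))
# -> {'rmw': False, 'sectors_read_first': 0, 'bytes_written': 512}
```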

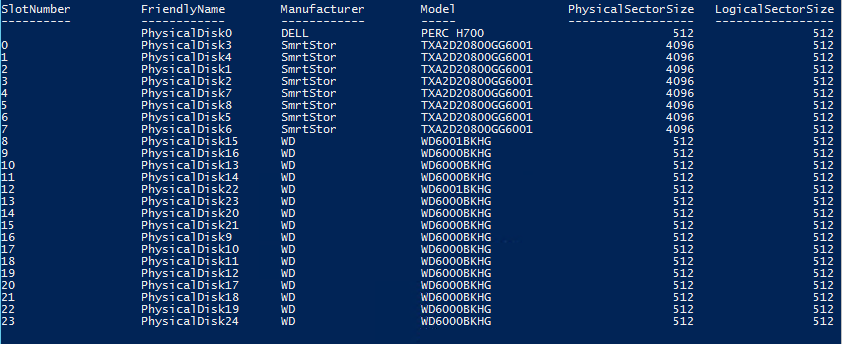

Enter Storage Spaces and Hyper-V. Storage Spaces understands that physical disks may have 512-byte or 4k sector sizes, and because it virtualizes storage, it also associates a sector size with each virtual disk. Using PowerShell, we can see these sector sizes:

```powershell
Get-PhysicalDisk | Sort-Object SlotNumber | Select-Object SlotNumber, FriendlyName, Manufacturer, Model, PhysicalSectorSize, LogicalSectorSize | Format-Table
```

Any disk whose PhysicalSectorSize is 4k but whose LogicalSectorSize is 512b is a 512e disk. A disk with a PhysicalSectorSize and LogicalSectorSize of 4k is a 4k native disk, and any disk with 512b for both PhysicalSectorSize and LogicalSectorSize is a standard 512-byte native HDD.
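The classification above is a simple lookup on the two columns from `Get-PhysicalDisk`. A minimal sketch, with made-up disk names and values standing in for real cmdlet output:

```python
def classify_disk(physical: int, logical: int) -> str:
    """Label a disk from its PhysicalSectorSize and LogicalSectorSize."""
    if physical == 4096 and logical == 512:
        return "512e"
    if physical == 4096 and logical == 4096:
        return "4k native"
    if physical == 512 and logical == 512:
        return "512-byte native (standard HDD)"
    return "unrecognized"


# Hypothetical values, shaped like the two Get-PhysicalDisk columns.
disks = {"SSD1": (4096, 512), "SSD2": (4096, 4096), "HDD1": (512, 512)}
for name, (phys, logi) in disks.items():
    print(name, classify_disk(phys, logi))
```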

The problem is that when you create a virtual disk with Storage Spaces without specifying a logical sector size in PowerShell (via the pool's LogicalSectorSizeDefault), the system creates the virtual disk with a LogicalSectorSize equal to the greatest PhysicalSectorSize of any disk in the pool. This means that if you have SSDs in your pool and created the virtual disk using the GUI, your virtual disk will have a 4k LogicalSectorSize. If a 512-byte write is sent to a virtual disk with a 4k LogicalSectorSize, the OS performs RMW, and if your physical disks are actually 512e, they too have to perform RMW in the disk controller for each 512 bytes of the 4k write they receive from the OS. That is a significant performance hit: it can cut write speeds to about 1/4 of the advertised figures and increase IO latency by 8x.
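A small sketch of the default described above and what it means for a single 512-byte write. It assumes the 2012 R2 behavior observed in this post (the virtual disk inherits the largest PhysicalSectorSize in the pool) and a write that starts on a sector boundary; both helper names are hypothetical.

```python
from typing import Sequence


def default_vd_logical_sector(pool_physical_sizes: Sequence[int]) -> int:
    """Behavior described above for 2012 R2: with no size specified, the
    virtual disk takes the largest PhysicalSectorSize in the pool."""
    return max(pool_physical_sizes)


def os_write_bytes(write_size: int, vd_sector: int) -> int:
    """Bytes the OS writes for one write that starts on a sector boundary:
    the request is rounded up to whole virtual-disk sectors."""
    sectors = -(-write_size // vd_sector)  # ceiling division
    return sectors * vd_sector


pool = [512, 512, 4096, 4096]      # hypothetical: two HDDs plus two SSDs
vd_sector = default_vd_logical_sector(pool)
print(vd_sector)
print(os_write_bytes(512, vd_sector))
```

Here the virtual disk ends up with 4096-byte sectors, so each 512-byte write becomes a 4k write (8x the data requested), preceded by a 4k read.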

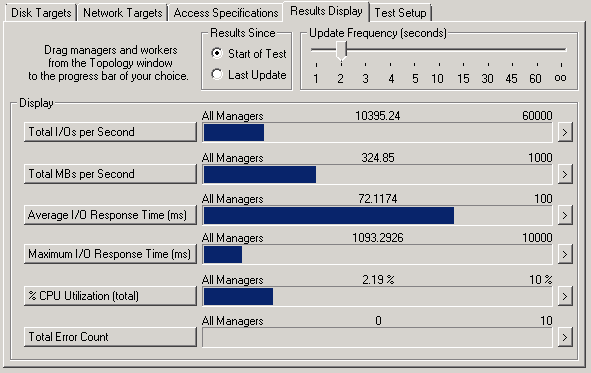

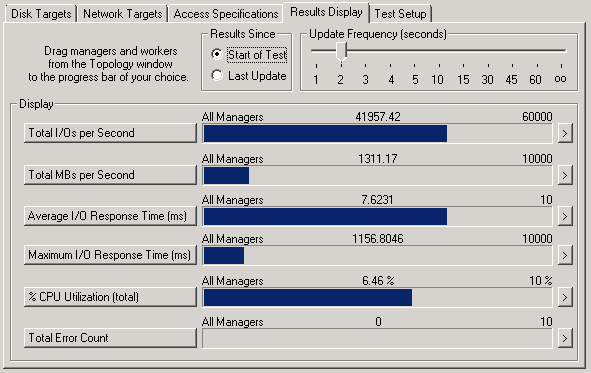

Why does this matter for Hyper-V? Unless you’ve specifically formatted your VHDX files with 4k sectors, they are likely using 512-byte sectors, meaning every write to VHDX storage on a Storage Spaces virtual disk performs this RMW operation in memory at the OS level, and then again at the disk controller. The proof is in the IOMeter tests:

32K Request, 65% Read, 65% Random

Virtual Disk 4k LogicalSectorSize

Virtual Disk 512b LogicalSectorSize

What is the solution to this problem?

Very interesting! I thought I would check my own setup, but apparently my HDDs AND my SSDs report that they are both physically and logically 512b. But to my surprise, every storage pool is set to 512 logical and 4k physical.

So why the 4k physical? And wouldn’t that cause a problem similar to the one you describe (just the other way around), with the processor having to convert every 512b operation to 4k and back again when hitting the physical disk?

Very interesting discovery! Very useful to know when dealing with 512 physical / 512 logical HDDs.

I created a storage tier from a pool with 2012 R2 that includes a 250GB SSD and 3TB WD RED. The LogicalSectorSize is 512 which matches my BOOT SSD (also 250GB).

CrystalDiskMark shows the tiered pool exceeding the BOOT SSD in all tests.

Also, I can’t find any reference in the linked New-VirtualDisk documentation to how a LogicalSectorSize can be defined. Can you clarify your results and explain the optimum sector size, please?

The sector size is set on the pool using LogicalSectorSizeDefault


The New-VirtualDisk cmdlet doesn’t let me specify the logical sector size. How do you specify a LogicalSectorSize for a virtual disk in a storage pool? Did you mean to link to the New-VHD cmdlet instead?

LogicalSectorSizeDefault is set using the New-StoragePool cmdlet.

Hi,

How did you format your virtual disks with 512b logical sector sizes?

I have looked at the New-VirtualDisk cmdlet, but it does not appear to have any option to specify this.

There is the ability to specify a default logical sector size when creating a new storage pool. Is this how you managed to do this?

The default sector size is specified on the pool, not on the virtual disk, using LogicalSectorSizeDefault:

https://technet.microsoft.com/en-us/library/hh848689.aspx

I have a GUI workaround to get LogicalSectorSizeDefault set to 512 that may also work for you if you have some 512 disks in your JBOD. Create your storage pool with only the 512 disks selected, then add the SSDs afterward using the Add Physical Disk feature.

I was having trouble getting the PowerShell command just right.

Hi Jeff,

Thanks for confirming that it is set using LogicalSectorSizeDefault.

Robert,

Are you saying that if I already have a storage pool with 512b disks, adding SSDs afterward will still create 512b virtual disks?

No. This gets set when first creating the storage pool. Adding SSDs after should not impact the logical sector size.
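The GUI workaround and this answer describe the same rule: the pool's default logical sector size is decided once, at creation, from the disks present at that moment. A hypothetical model of that behavior as reported in this thread for 2012 R2 (`PoolModel` is an illustrative name, not a Storage Spaces object):

```python
class PoolModel:
    """Hypothetical model of the behavior reported in this thread (2012 R2):
    the pool's logical sector size default is decided once, at creation."""

    def __init__(self, physical_sizes, logical_sector_size_default=None):
        self.disks = list(physical_sizes)
        # Unspecified: follow the largest physical sector present at creation.
        self.logical_sector_size_default = (
            logical_sector_size_default
            if logical_sector_size_default is not None
            else max(self.disks)
        )

    def add_disk(self, physical_size):
        # Adding disks later leaves the existing default untouched.
        self.disks.append(physical_size)


pool = PoolModel([512, 512])   # GUI workaround: create with 512-byte HDDs only
pool.add_disk(4096)            # then add the SSDs
pool.add_disk(4096)
print(pool.logical_sector_size_default)
```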

Hi, very interesting!

But I can’t create the pool with PowerShell, although I can in the GUI. Error: “One of the physical disks specified is not supported by this operation”.

Do you know anything about this?

Sorry, never mind, it’s solved. I needed to use `-StorageSubsystemFriendlyName "Clustered Storage Spaces*"`.

What about the pool’s PhysicalSectorSize? I always get 4096, never 512.

Do you mind elaborating on the IOMeter settings you used to obtain these benchmark differences? I set up a similar comparison on our tiered storage spaces and obtained very similar results regardless of the logical sector size of the storage pool, contrary to what you found.

32K Request, 65% Read, 65% Random

Hi!

Did you run IOMeter on the virtual disk directly or within a VM? I did some tests too, but cannot confirm the performance impact found here. I have a mix of 4K and 512b drives and tested with pools of 4K and 512b sector size: no difference.

Robert, Jeff,

I am confused about the solution, since some of my drives are Advanced Format (512e). Can you confirm that the solution is as follows, for the non-experts among us?

1. The storage space is on the host. Check the physical sector size with Get-PhysicalDisk before creating the space.

2. Create the pool with the HDDs only, using a 512-byte sector size if that is the HDD default.

3. Add the SSDs via the GUI after creating the pool above. This forces the SSDs to use 512 bytes (is this correct?).

4. If the HDD default is 4k, don't mix it with 512-byte HDDs, or create the 512-byte pool first as above.

5. If the HDD is 4k physical, it is fine with SSDs, as these are also 4k.

Thanks

You’ll need to add ALL of the disks to the storage server BEFORE creating the pool. Then use the Get-PhysicalDisk command to check the physical and logical sector sizes. If any of them have a logical sector size of 512, set your storage pool logical sector size to 512 – the disks using 512e can already handle the RMW operation in firmware. If ALL of your disks are 4k logical sector size, you’ll need to set the storage pool to 4k and ensure your virtual disks are using 4k sectors.
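The rule of thumb in this reply can be written as a small decision function over the `Get-PhysicalDisk` results, collected for every disk before the pool is created. It is a sketch of the reply's rule only; the result would be the value passed as LogicalSectorSizeDefault when creating the pool, and `recommended_pool_sector` is a hypothetical name.

```python
def recommended_pool_sector(disks):
    """disks: (PhysicalSectorSize, LogicalSectorSize) pairs from Get-PhysicalDisk,
    collected for ALL disks before the pool is created.

    Follows the rule of thumb above: any 512-byte logical disk means a
    512-byte pool; only an all-4k-logical set of disks gets a 4k pool.
    """
    logical_sizes = {logical for _, logical in disks}
    if 512 in logical_sizes:
        return 512   # 512e disks already absorb the RMW in firmware
    return 4096      # all 4k native: also use 4k-sector virtual disks


print(recommended_pool_sector([(4096, 512), (512, 512)]))
print(recommended_pool_sector([(4096, 4096), (4096, 4096)]))
```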

So are you recommending that the storage pool/virtual disk be created with 512 for VMs?

```
PS C:\Windows\system32> get-virtualdisk -friendlyname VD5 | select LogicalSectorSize

LogicalSectorSize
-----------------
512
```

Let me clarify: to avoid the RMW penalty when using tiered storage, the storage pool (SP) was created using just the HDDs (512b), and the SSDs (4k) were added afterward, resulting in a native 512b SP. The virtual disk (VD) was then created, and it is 512b.



Why do you test with 32K Request, 65% Read, 65% Random?

https://technet.microsoft.com/en-us/library/dn894707(d=printer,v=ws.11).aspx?f=255&mspperror=-2147217396

That’s based on the profile that was developed from capturing traffic of actual VM workloads. Each application has different IO patterns, and as a service provider, we needed an “average” profile to determine how many customers can be supported by the underlying hardware.
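To show what a "32K request, 65% read, 65% random" profile means in practice, here is a hypothetical request generator in the spirit of an IOMeter access spec. It is not IOMeter itself; the parameter names and the 1 GiB test area are assumptions made for illustration.

```python
import random


def io_profile(n, request=32 * 1024, read_pct=65, random_pct=65,
               span=1 << 30, seed=0):
    """Generate n (op, offset, size) requests resembling an IOMeter-style
    access spec: fixed request size, read/write mix, random/sequential mix."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    slots = span // request    # request-aligned positions in the test area
    next_seq = 0
    requests = []
    for _ in range(n):
        op = "read" if rng.random() < read_pct / 100 else "write"
        if rng.random() < random_pct / 100:
            offset = rng.randrange(slots) * request  # random, request-aligned
        else:
            offset = next_seq                        # continue sequentially
        next_seq = (offset + request) % span
        requests.append((op, offset, request))
    return requests


reqs = io_profile(10_000)
reads = sum(op == "read" for op, _, _ in reqs)
print(f"{reads / len(reqs):.1%} reads")  # close to 65%
```

Because every request in this sketch is 32K and aligned to 32K, it never straddles a 4k sector; whether the real test tool aligns its I/Os depends on its own settings.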

Hi Jeff

So it makes sense, then, to always use 4k-sector VHDX files as the basis for Storage Spaces, since they also align perfectly on 512-byte-sector drives.

Would that work?

Yes, as long as your guests support 4k sector size, it’s the recommended configuration. We had to support down-level OSes that did not recognize 4k sector size on OS disk.

Keep in mind that if you later install a native 4k disk, you need to create the virtual disk with a LogicalSectorSize of 4k.

So my SSDs have a physical and logical sector size of 512, and my HDDs have physical and logical sector size of 4K. I have created tiered virtual disks and they have a Logical Sector Size of 4K. Will I be affected by the RMW penalty?

Great article.

You might consider changing the title from: SSD’s on Storage Spaces are killing your VM’s performance

to something like: Misconfigured SSD’s on Storage Spaces are killing your VM’s performance

That’s cool. Can I get a tutorial on formatting virtual disks with 512b logical sector sizes?

This doesn’t seem to be true anymore, at least with Windows Server 2019. Here is my test:

* I have a bunch of SSDs (128GB) and HDDs (3-4TB). So far, the Get-PhysicalDisk cmdlet shows all the SSD with both physical and logical sectors of 512 B, and all the HDDs with physical sectors 4 KB and logical sectors 512 B.

* When I create a storage pool through the GUI, no matter which disks I select (HDD only, SSD only, a mix of HDD and SSD), I get a storage pool with physical sector of 4 KB and logical sector of 512 B. Again – even if I select SSD only, which was shown as physical AND logical sectors of 4 KB.

* After that, I create a virtual disk through the GUI (with no option of setting the sector size), and I get (again) a physical sector of 4 KB and a logical sector of 512 B.

According to this article (https://docs.microsoft.com/en-us/windows-server/administration/performance-tuning/role/hyper-v-server/storage-io-performance#sector-size-implications), Microsoft made some changes in WS2016. Could they be related to these results?

And finally, with all that said: do we (now) need to think about setting the virtual disk’s logical sector size? Or can we create our storage topology by just making sure that on every level (storage pool, virtual disk) the logical sector size is indeed 512 B (unless all our physical disk drives REPORT their physical sector size as 4 KB), and then making sure that the VHDX files are created as 4 KB (so that they are “prepared” for being moved to a storage system with a native 4 KB physical sector size)?

On my previous comment I see a typo. The SSDs indeed show themselves as 512 B, both physical and logical sectors. So, the line “Again – even if I select SSD only, which was shown as physical AND logical sectors of 4 KB.” should be correctly read as “Again – even if I select SSD only, which was shown as physical AND logical sectors of 512 B.”

The logic for creating a virtual disk could very well have changed with Server 2019 or even updates to Server 2016. All of my testing was actually done with 2012 R2. Also, performance issues were most prevalent with legacy OSes (Windows Server 2003) and dynamic disks. The key thing is to understand your workload and make sure you’ve optimized storage for that workload. Effectively, you want to avoid the overhead of RMW where possible.

Hi Jeff, thanks for the answer! I understand the reasoning, just trying to figure out the best approach. My idea is the following – you have the following layers:

a) VHDX file system cluster size

b) VHDX logical sector size

c) VHDX physical sector size

d) Storage Spaces Virtual Disk file system cluster size

e) Storage Spaces Virtual Disk sector size

f) Physical Disk logical sector size (512 b for 512e drives)

g) Physical Disk physical sector size (4096 b for 512e drives)

In this example, the lowest two layers (f and g) are fixed and cannot be changed; all the layers above can be set during setup of the respective layer/component. So, does it make sense to go the following way? We know the services in the VM work at the file system level and don’t need low-level access (as with any typical business application: SQL, Exchange, file services, etc.; I am explicitly excluding backup and dedup services, as those might interfere with the (virtual) hardware at a lower level), so those services will work with 4-64KB chunks of data. If we just set all the layers above f) to at least 4096 bytes, does any RMW occur? For example:

a) VHDX file system cluster size (ReFS 4 KB, as recommended by MS)

b) VHDX logical sector size (4 KB)

c) VHDX physical sector size (4 KB)

d) Storage Spaces Virtual Disk file system cluster size (ReFS 4 KB)

e) Storage Spaces Virtual Disk sector size (4 KB)

f) Physical Disk logical sector size (512 b for 512e drives)

g) Physical Disk physical sector size (4096 b for 512e drives)

I wonder whether the physical machine's OS (the only one that needs to work with drives whose sectors are smaller than 4096 bytes, and the one that operates Storage Spaces) will do an RMW, or whether it isn't needed, since all the chunks it gets from above are 4 KB. When it needs to put those on the physical disk (through the Storage Spaces subsystem), will it just pass the 4 KB write to the physical disk controller and let the controller handle it?
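The layered question can be checked with a simplified model: walk the sector sizes from the example stack and flag every layer whose sector size the write is not aligned to. The simplifying assumption is that a layer needs RMW exactly when the write's offset or length is not a whole multiple of its sector size. The model ignores caching, metadata writes, and anything the guest file system does. For layer g on a 512e drive, any such RMW would happen in the drive firmware. `STACK` and `rmw_layers` are hypothetical names.

```python
# Sector sizes from the example stack above (bytes), top to bottom.
# File system cluster sizes (a, d) are left out: this only models sectors.
STACK = [
    ("b) VHDX logical sector", 4096),
    ("e) Storage Spaces virtual disk sector", 4096),
    ("f) physical disk logical sector", 512),
    ("g) physical disk physical sector", 4096),
]


def rmw_layers(offset: int, length: int, stack=STACK) -> list:
    """Layers where a write is not aligned to the layer's sector size.

    Simplifying assumption: a layer needs read-modify-write exactly when the
    write's offset or length is not a whole multiple of its sector size.
    """
    return [name for name, sector in stack if offset % sector or length % sector]


print(rmw_layers(offset=8192, length=4096))  # []: 4 KB-aligned, no RMW anywhere
print(rmw_layers(offset=512, length=512))    # every 4k layer would need RMW
```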

In my testing, the only thing that really had a significant performance impact was ensuring that e and f matched. If the logical sector size of the physical disk was 512b but the Storage Space logical sector size was 4K, the OS performed RMW for every disk operation. And if the physical hard disk is 512e, its performance specifications already account for the RMW it does in hardware.